6 Next Steps

So far, you’ve learned how to create a basic OpenXR application: how to initialize and shut down an OpenXR instance and session, how to connect a graphics API to receive rendering events, and how to poll your runtime for interactions. The next steps are up to you, but in this chapter you’ll find a few suggestions.

Note: This chapter does not follow a continuous path to a goal, but instead presents a few ideas for the reader to explore. If you are using the OpenXR-Tutorials GitHub Repository, only chapters 1 to 5 are included in the CMake build from the root of the repository. There is a Chapter6_1_Multiview folder which contains a separate ‘mini-CMake build’ for code reference and testing.

6.1 Multiview rendering

Stereoscopic rendering usually involves drawing two very similar views of the same scene, with only a slight difference in perspective due to the separation of the left and right eye positions. Multiview rendering provides a great saving, particularly of CPU time, as we can use just one set of draw calls to render both views. The benefits can also extend to the GPU, depending on the implementation. In certain cases, it’s possible that the Input Assembler only needs to be invoked once for all views, instead of repeating non-view dependent work redundantly.

Multiview or View Instancing can be used for stereo rendering by creating one XrSwapchain that contains two array images.

6.1.1 CMake and Downloads

This chapter is based on the code from Chapter 4. Create a Chapter6 folder in the workspace directory and copy across the contents of your Chapter4 folder.

In the workspace directory, update the CMakeLists.txt by adding the following CMake code to the end of the file:

add_subdirectory(Chapter6)

There are some changes in Chapter 6 to the implementation of GraphicsAPI. Update the Common folder with the zip archive below.

Chapter6_1_Multiview_Common_Vulkan.zip

For multiview, there are some changes to the shaders. Create a new ShadersMultiview folder in the workspace directory and download the new shaders.

Update the Chapter6/CMakeLists.txt as follows. First, we update the project name:

cmake_minimum_required(VERSION 3.28.3)

set(PROJECT_NAME OpenXRTutorialChapter6)

project("${PROJECT_NAME}")

Next, we update the shaders file paths:

set(VK_SHADERS "../ShadersMultiview/VertexShader_VK_MV.glsl"

"../ShadersMultiview/PixelShader_VK_MV.glsl"

)

Just after the point where we link the openxr_loader, we add the XR_TUTORIAL_ENABLE_MULTIVIEW definition to enable multiview in the GraphicsAPI class.

target_compile_definitions(

${PROJECT_NAME} PUBLIC XR_TUTORIAL_ENABLE_MULTIVIEW

)

Finally, we update the CMake section where we compile the shaders:

# Vulkan GLSL

set(SHADER_DEST "${CMAKE_CURRENT_BINARY_DIR}")

if(Vulkan_FOUND)

include(glsl_shader)

set_source_files_properties(

../ShadersMultiview/VertexShader_VK_MV.glsl PROPERTIES ShaderType

"vert"

)

set_source_files_properties(

../ShadersMultiview/PixelShader_VK_MV.glsl PROPERTIES ShaderType

"frag"

)

foreach(FILE ${VK_SHADERS})

get_filename_component(FILE_WE ${FILE} NAME_WE)

get_source_file_property(shadertype ${FILE} ShaderType)

glsl_spv_shader(

INPUT

"${CMAKE_CURRENT_SOURCE_DIR}/${FILE}"

OUTPUT

"${SHADER_DEST}/${FILE_WE}.spv"

STAGE

${shadertype}

ENTRY_POINT

main

TARGET_ENV

vulkan1.0

)

# Make our project depend on these files

target_sources(

${PROJECT_NAME} PRIVATE "${SHADER_DEST}/${FILE_WE}.spv"

)

endforeach()

endif()

6.1.2 Update Main.cpp

First, we update the type of members for the color and depth swapchains to be just a single SwapchainInfo struct containing a single XrSwapchain.

SwapchainInfo m_colorSwapchainInfo = {};

SwapchainInfo m_depthSwapchainInfo = {};

Now in the CreateSwapchains() method, after enumerating the swapchain formats, we check that the two views for stereo rendering are coherent in size. This is vital as the swapchain image layer for each view must match. We also set up the viewCount variable, which is just the number of views in the XrViewConfigurationType.

bool coherentViews = m_viewConfiguration == XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO;

for (const XrViewConfigurationView &viewConfigurationView : m_viewConfigurationViews) {

// Check the current view size against the first view.

coherentViews &= m_viewConfigurationViews[0].recommendedImageRectWidth == viewConfigurationView.recommendedImageRectWidth;

coherentViews &= m_viewConfigurationViews[0].recommendedImageRectHeight == viewConfigurationView.recommendedImageRectHeight;

}

if (!coherentViews) {

XR_TUT_LOG_ERROR("The views are not coherent. Unable to create a single Swapchain.");

DEBUG_BREAK;

}

const XrViewConfigurationView &viewConfigurationView = m_viewConfigurationViews[0];

uint32_t viewCount = static_cast<uint32_t>(m_viewConfigurationViews.size());
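The size check can also be read as a small standalone function. The following is only a sketch: ViewSize is a hypothetical stand-in for the two XrViewConfigurationView fields involved, and AreViewsCoherent is an illustrative name that does not exist in the tutorial code. It accumulates the comparison with a logical AND, so a single mismatching view makes the result false.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the XrViewConfigurationView fields used by the check.
struct ViewSize {
    uint32_t recommendedImageRectWidth;
    uint32_t recommendedImageRectHeight;
};

// Returns true only if every view has the same recommended size as the first view.
bool AreViewsCoherent(const std::vector<ViewSize> &views) {
    if (views.empty()) {
        return false; // Nothing to render to, so treat it as not coherent.
    }
    bool coherent = true;
    for (const ViewSize &view : views) {
        // AND-accumulate: one mismatch must make the whole result false.
        coherent &= views[0].recommendedImageRectWidth == view.recommendedImageRectWidth;
        coherent &= views[0].recommendedImageRectHeight == view.recommendedImageRectHeight;
    }
    return coherent;
}
```

If the function returns false, a single array swapchain cannot cover all views, and the per-view swapchains from Chapter 4 remain the fallback.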

Next, we remove the std::vector<> resizes and the loop and create a single color and single depth XrSwapchain for all the views. This is done by setting the arraySize of our XrSwapchainCreateInfo to viewCount, which for stereo will be 2. Update your swapchain creation code.

// Create a color and depth swapchain, and their associated image views.

// Fill out an XrSwapchainCreateInfo structure and create an XrSwapchain.

// Color.

XrSwapchainCreateInfo swapchainCI{XR_TYPE_SWAPCHAIN_CREATE_INFO};

swapchainCI.createFlags = 0;

swapchainCI.usageFlags = XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_COLOR_ATTACHMENT_BIT;

swapchainCI.format = m_graphicsAPI->SelectColorSwapchainFormat(formats); // Use GraphicsAPI to select the first compatible format.

swapchainCI.sampleCount = viewConfigurationView.recommendedSwapchainSampleCount; // Use the recommended values from the XrViewConfigurationView.

swapchainCI.width = viewConfigurationView.recommendedImageRectWidth;

swapchainCI.height = viewConfigurationView.recommendedImageRectHeight;

swapchainCI.faceCount = 1;

swapchainCI.arraySize = viewCount;

swapchainCI.mipCount = 1;

OPENXR_CHECK(xrCreateSwapchain(m_session, &swapchainCI, &m_colorSwapchainInfo.swapchain), "Failed to create Color Swapchain");

m_colorSwapchainInfo.swapchainFormat = swapchainCI.format; // Save the swapchain format for later use.

// Depth.

swapchainCI.createFlags = 0;

swapchainCI.usageFlags = XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT;

swapchainCI.format = m_graphicsAPI->SelectDepthSwapchainFormat(formats); // Use GraphicsAPI to select the first compatible format.

swapchainCI.sampleCount = viewConfigurationView.recommendedSwapchainSampleCount; // Use the recommended values from the XrViewConfigurationView.

swapchainCI.width = viewConfigurationView.recommendedImageRectWidth;

swapchainCI.height = viewConfigurationView.recommendedImageRectHeight;

swapchainCI.faceCount = 1;

swapchainCI.arraySize = viewCount;

swapchainCI.mipCount = 1;

OPENXR_CHECK(xrCreateSwapchain(m_session, &swapchainCI, &m_depthSwapchainInfo.swapchain), "Failed to create Depth Swapchain");

m_depthSwapchainInfo.swapchainFormat = swapchainCI.format; // Save the swapchain format for later use.

Update the calls to xrEnumerateSwapchainImages with new member names.

// Get the number of images in the color/depth swapchain and allocate Swapchain image data via GraphicsAPI to store the returned array.

uint32_t colorSwapchainImageCount = 0;

OPENXR_CHECK(xrEnumerateSwapchainImages(m_colorSwapchainInfo.swapchain, 0, &colorSwapchainImageCount, nullptr), "Failed to enumerate Color Swapchain Images.");

XrSwapchainImageBaseHeader *colorSwapchainImages = m_graphicsAPI->AllocateSwapchainImageData(m_colorSwapchainInfo.swapchain, GraphicsAPI::SwapchainType::COLOR, colorSwapchainImageCount);

OPENXR_CHECK(xrEnumerateSwapchainImages(m_colorSwapchainInfo.swapchain, colorSwapchainImageCount, &colorSwapchainImageCount, colorSwapchainImages), "Failed to enumerate Color Swapchain Images.");

uint32_t depthSwapchainImageCount = 0;

OPENXR_CHECK(xrEnumerateSwapchainImages(m_depthSwapchainInfo.swapchain, 0, &depthSwapchainImageCount, nullptr), "Failed to enumerate Depth Swapchain Images.");

XrSwapchainImageBaseHeader *depthSwapchainImages = m_graphicsAPI->AllocateSwapchainImageData(m_depthSwapchainInfo.swapchain, GraphicsAPI::SwapchainType::DEPTH, depthSwapchainImageCount);

OPENXR_CHECK(xrEnumerateSwapchainImages(m_depthSwapchainInfo.swapchain, depthSwapchainImageCount, &depthSwapchainImageCount, depthSwapchainImages), "Failed to enumerate Depth Swapchain Images.");

We will now create image views that encompass the two subresources - one layer per eye view. We update the ImageViewCreateInfo::view to TYPE_2D_ARRAY and set the ImageViewCreateInfo::layerCount to viewCount. Update your swapchain image view creation code.

// Per image in the swapchains, fill out a GraphicsAPI::ImageViewCreateInfo structure and create a color/depth image view.

for (uint32_t j = 0; j < colorSwapchainImageCount; j++) {

GraphicsAPI::ImageViewCreateInfo imageViewCI;

imageViewCI.image = m_graphicsAPI->GetSwapchainImage(m_colorSwapchainInfo.swapchain, j);

imageViewCI.type = GraphicsAPI::ImageViewCreateInfo::Type::RTV;

imageViewCI.view = GraphicsAPI::ImageViewCreateInfo::View::TYPE_2D_ARRAY;

imageViewCI.format = m_colorSwapchainInfo.swapchainFormat;

imageViewCI.aspect = GraphicsAPI::ImageViewCreateInfo::Aspect::COLOR_BIT;

imageViewCI.baseMipLevel = 0;

imageViewCI.levelCount = 1;

imageViewCI.baseArrayLayer = 0;

imageViewCI.layerCount = viewCount;

m_colorSwapchainInfo.imageViews.push_back(m_graphicsAPI->CreateImageView(imageViewCI));

}

for (uint32_t j = 0; j < depthSwapchainImageCount; j++) {

GraphicsAPI::ImageViewCreateInfo imageViewCI;

imageViewCI.image = m_graphicsAPI->GetSwapchainImage(m_depthSwapchainInfo.swapchain, j);

imageViewCI.type = GraphicsAPI::ImageViewCreateInfo::Type::DSV;

imageViewCI.view = GraphicsAPI::ImageViewCreateInfo::View::TYPE_2D_ARRAY;

imageViewCI.format = m_depthSwapchainInfo.swapchainFormat;

imageViewCI.aspect = GraphicsAPI::ImageViewCreateInfo::Aspect::DEPTH_BIT;

imageViewCI.baseMipLevel = 0;

imageViewCI.levelCount = 1;

imageViewCI.baseArrayLayer = 0;

imageViewCI.layerCount = viewCount;

m_depthSwapchainInfo.imageViews.push_back(m_graphicsAPI->CreateImageView(imageViewCI));

}

In DestroySwapchains(), we again remove the loop and update the member names used.

// Destroy the color and depth image views from GraphicsAPI.

for (void*& imageView : m_colorSwapchainInfo.imageViews) {

m_graphicsAPI->DestroyImageView(imageView);

}

for (void*& imageView : m_depthSwapchainInfo.imageViews) {

m_graphicsAPI->DestroyImageView(imageView);

}

// Free the Swapchain Image Data.

m_graphicsAPI->FreeSwapchainImageData(m_colorSwapchainInfo.swapchain);

m_graphicsAPI->FreeSwapchainImageData(m_depthSwapchainInfo.swapchain);

// Destroy the swapchains.

OPENXR_CHECK(xrDestroySwapchain(m_colorSwapchainInfo.swapchain), "Failed to destroy Color Swapchain");

OPENXR_CHECK(xrDestroySwapchain(m_depthSwapchainInfo.swapchain), "Failed to destroy Depth Swapchain");

With the swapchains correctly set up, we now need to update our resources for rendering. First, we update our CameraConstants struct to contain two viewProj and two modelViewProj matrices as arrays. The two matrices are unique to each eye view.

struct CameraConstants {

XrMatrix4x4f viewProj[2];

XrMatrix4x4f modelViewProj[2];

XrMatrix4x4f model;

XrVector4f color;

XrVector4f pad1;

XrVector4f pad2;

XrVector4f pad3;

};

CameraConstants cameraConstants;

XrVector4f normals[6] = {

{1.00f, 0.00f, 0.00f, 0},

{-1.00f, 0.00f, 0.00f, 0},

{0.00f, 1.00f, 0.00f, 0},

{0.00f, -1.00f, 0.00f, 0},

{0.00f, 0.00f, 1.00f, 0},

{0.00f, 0.0f, -1.00f, 0}};

Because of the larger size of the camera constant/uniform buffer, we need to align the size of the structure so that it’s compatible with the graphics API. We use GraphicsAPI::AlignSizeForUniformBuffer() to align up the size when creating the buffer.

m_uniformBuffer_Camera = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::UNIFORM, 0, m_graphicsAPI->AlignSizeForUniformBuffer(sizeof(CameraConstants)) * numberOfCuboids, nullptr});

m_uniformBuffer_Normals = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::UNIFORM, 0, sizeof(normals), &normals});
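To illustrate what "aligning up" means, here is a minimal sketch of the usual round-up-to-a-multiple calculation. AlignUp and AlignedOffset are hypothetical helper names, and the 256-byte alignment used in the example is an assumption (D3D12 requires it for constant buffers, while Vulkan reports its own value in minUniformBufferOffsetAlignment); the actual GraphicsAPI::AlignSizeForUniformBuffer() may be implemented differently.

```cpp
#include <cassert>
#include <cstddef>

// Rounds size up to the next multiple of alignment.
// Assumption: alignment is a non-zero power of two.
size_t AlignUp(size_t size, size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (size + alignment - 1) & ~(alignment - 1);
}

// Byte offset of the element at index when each element occupies one aligned slot.
size_t AlignedOffset(size_t elementSize, size_t alignment, size_t index) {
    return AlignUp(elementSize, alignment) * index;
}
```

With the multiview CameraConstants struct (five 64-byte matrices and four 16-byte vectors, 384 bytes in total), AlignUp(384, 256) gives 512, so each cuboid's constants would start on a 512-byte boundary under that assumed alignment.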

Next, we update the shader filepaths:

if (m_apiType == VULKAN) {

std::vector<char> vertexSource = ReadBinaryFile("VertexShader_VK_MV.spv");

m_vertexShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::VERTEX, vertexSource.data(), vertexSource.size()});

std::vector<char> fragmentSource = ReadBinaryFile("PixelShader_VK_MV.spv");

m_fragmentShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::FRAGMENT, fragmentSource.data(), fragmentSource.size()});

}

Finally, we update the PipelineCreateInfo struct with the updated member names and assign the new member PipelineCreateInfo::viewMask the value 0b11 (decimal 3). This member is used by some graphics APIs to set up the pipeline correctly. Each set bit refers to a single view that the shaders should write to. It’s possible to mask off certain views for rendering, e.g. 0b0101 would only render to the first and third views of a possible four-view configuration.

GraphicsAPI::PipelineCreateInfo pipelineCI;

pipelineCI.shaders = {m_vertexShader, m_fragmentShader};

pipelineCI.vertexInputState.attributes = {{0, 0, GraphicsAPI::VertexType::VEC4, 0, "TEXCOORD"}};

pipelineCI.vertexInputState.bindings = {{0, 0, 4 * sizeof(float)}};

pipelineCI.inputAssemblyState = {GraphicsAPI::PrimitiveTopology::TRIANGLE_LIST, false};

pipelineCI.rasterisationState = {false, false, GraphicsAPI::PolygonMode::FILL, GraphicsAPI::CullMode::BACK, GraphicsAPI::FrontFace::COUNTER_CLOCKWISE, false, 0.0f, 0.0f, 0.0f, 1.0f};

pipelineCI.multisampleState = {1, false, 1.0f, 0xFFFFFFFF, false, false};

pipelineCI.depthStencilState = {true, true, GraphicsAPI::CompareOp::LESS_OR_EQUAL, false, false, {}, {}, 0.0f, 1.0f};

pipelineCI.colorBlendState = {false, GraphicsAPI::LogicOp::NO_OP, {{true, GraphicsAPI::BlendFactor::SRC_ALPHA, GraphicsAPI::BlendFactor::ONE_MINUS_SRC_ALPHA, GraphicsAPI::BlendOp::ADD, GraphicsAPI::BlendFactor::ONE, GraphicsAPI::BlendFactor::ZERO, GraphicsAPI::BlendOp::ADD, (GraphicsAPI::ColorComponentBit)15}}, {0.0f, 0.0f, 0.0f, 0.0f}};

pipelineCI.colorFormats = {m_colorSwapchainInfo.swapchainFormat};

pipelineCI.depthFormat = m_depthSwapchainInfo.swapchainFormat;

pipelineCI.layout = {{0, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX},

{1, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX},

{2, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::FRAGMENT}};

pipelineCI.viewMask = 0b11;

m_pipeline = m_graphicsAPI->CreatePipeline(pipelineCI);
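The view mask is an ordinary bitfield, so it can be built and inspected with plain bit operations. The helpers below are a sketch only; MakeViewMask and IsViewEnabled are hypothetical names and are not part of GraphicsAPI.

```cpp
#include <cstdint>

// Builds a mask with the lowest viewCount bits set, e.g. 2 views give 0b11.
uint32_t MakeViewMask(uint32_t viewCount) {
    // Shifting a 32-bit value by 32 or more is undefined, so handle that case separately.
    return viewCount >= 32 ? 0xFFFFFFFFu : ((1u << viewCount) - 1u);
}

// Returns true if the bit for viewIndex is set in viewMask.
bool IsViewEnabled(uint32_t viewMask, uint32_t viewIndex) {
    return viewIndex < 32 && ((viewMask >> viewIndex) & 1u) != 0;
}
```

For stereo rendering, MakeViewMask(2) yields the same 0b11 value assigned to pipelineCI.viewMask, and IsViewEnabled(0b0101, 1) is false because the second view is masked off.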

After the swapchain and resource creation, we need to update our rendering code. In RenderCuboid(), we do the same matrix multiplication for both eye views, and use GraphicsAPI::AlignSizeForUniformBuffer() to calculate the correct offset into the buffer containing the constant/uniform buffers. The DrawIndexed() call is modified so that, for D3D11 only, we use instanced rendering.

XrMatrix4x4f_CreateTranslationRotationScale(&cameraConstants.model, &pose.position, &pose.orientation, &scale);

const uint32_t viewCount = 2; // This will only work for stereo rendering.

for (uint32_t i = 0; i < viewCount; i++) {

XrMatrix4x4f_Multiply(&cameraConstants.modelViewProj[i], &cameraConstants.viewProj[i], &cameraConstants.model);

}

cameraConstants.color = {color.x, color.y, color.z, 1.0};

size_t offsetCameraUB = m_graphicsAPI->AlignSizeForUniformBuffer(sizeof(CameraConstants)) * renderCuboidIndex;

m_graphicsAPI->SetPipeline(m_pipeline);

m_graphicsAPI->SetBufferData(m_uniformBuffer_Camera, offsetCameraUB, sizeof(CameraConstants), &cameraConstants);

m_graphicsAPI->SetDescriptor({0, m_uniformBuffer_Camera, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX, false, offsetCameraUB, sizeof(CameraConstants)});

m_graphicsAPI->SetDescriptor({1, m_uniformBuffer_Normals, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX, false, 0, sizeof(normals)});

m_graphicsAPI->UpdateDescriptors();

m_graphicsAPI->SetVertexBuffers(&m_vertexBuffer, 1);

m_graphicsAPI->SetIndexBuffer(m_indexBuffer);

m_graphicsAPI->DrawIndexed(36, m_apiType == D3D11 ? 2 : 1); // For D3D11, use instanced rendering for multiview.

renderCuboidIndex++;
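The per-view multiplication can be tried in isolation with a small column-major matrix type. This is a sketch under stated assumptions: Mat4, Multiply and MakeModelViewProj are hypothetical stand-ins for XrMatrix4x4f and XrMatrix4x4f_Multiply from the OpenXR helper header, written out here so the example compiles on its own.

```cpp
#include <array>
#include <cstddef>

// Column-major 4x4 matrix: element (row, col) is stored at m[col * 4 + row].
using Mat4 = std::array<float, 16>;

// Returns a * b for column-major matrices.
Mat4 Multiply(const Mat4 &a, const Mat4 &b) {
    Mat4 result{};
    for (size_t col = 0; col < 4; col++) {
        for (size_t row = 0; row < 4; row++) {
            float sum = 0.0f;
            for (size_t k = 0; k < 4; k++) {
                sum += a[k * 4 + row] * b[col * 4 + k];
            }
            result[col * 4 + row] = sum;
        }
    }
    return result;
}

// One model matrix is combined with each eye's view-projection matrix.
std::array<Mat4, 2> MakeModelViewProj(const std::array<Mat4, 2> &viewProj, const Mat4 &model) {
    std::array<Mat4, 2> modelViewProj{};
    for (size_t i = 0; i < viewProj.size(); i++) {
        modelViewProj[i] = Multiply(viewProj[i], model);
    }
    return modelViewProj;
}
```

The model matrix is shared, so only the view-projection part differs between the two results. That is why a single draw call can serve both eyes once the shader selects the matrix by view index.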

In RenderLayer(), there’s no need to repeat the rendering code per eye view; instead, we call xrAcquireSwapchainImage and xrWaitSwapchainImage for both the color and depth swapchains to get the next 2D array image from them. We call xrReleaseSwapchainImage at the end of the XR frame for both the color and depth swapchains. These single color and depth swapchains neatly encapsulate the two eye views and simplify the rendering code by removing the ‘per eye’ loop.

We are still required to submit an XrCompositionLayerProjectionView structure for each view in the system, but in the XrSwapchainSubImage we can set the imageArrayIndex to specify which layer of the swapchain image we wish to associate with that view. So in the case of stereo rendering, it would be 0 for left and 1 for right eye views. We attach our 2D array image as a render target/color attachment for the pixel/fragment shader to write to. In a for loop, we iterate through each XrView and create unique view and projection matrices. Update the rendering code in RenderLayer().

// Locate the views from the view configuration within the (reference) space at the display time.

std::vector<XrView> views(m_viewConfigurationViews.size(), {XR_TYPE_VIEW});

XrViewState viewState{XR_TYPE_VIEW_STATE}; // Will contain information on whether the position and/or orientation is valid and/or tracked.

XrViewLocateInfo viewLocateInfo{XR_TYPE_VIEW_LOCATE_INFO};

viewLocateInfo.viewConfigurationType = m_viewConfiguration;

viewLocateInfo.displayTime = renderLayerInfo.predictedDisplayTime;

viewLocateInfo.space = m_localSpace;

uint32_t viewCount = 0;

XrResult result = xrLocateViews(m_session, &viewLocateInfo, &viewState, static_cast<uint32_t>(views.size()), &viewCount, views.data());

if (result != XR_SUCCESS) {

    XR_TUT_LOG("Failed to locate Views.");

    return false;

}

// Resize the layer projection views to match the view count. The layer projection views are used in the layer projection.

renderLayerInfo.layerProjectionViews.resize(viewCount, {XR_TYPE_COMPOSITION_LAYER_PROJECTION_VIEW});

// Acquire and wait for an image from the swapchains.

// Get the image index of an image in the swapchains.

// The timeout is infinite.

uint32_t colorImageIndex = 0;

uint32_t depthImageIndex = 0;

XrSwapchainImageAcquireInfo acquireInfo{XR_TYPE_SWAPCHAIN_IMAGE_ACQUIRE_INFO};

OPENXR_CHECK(xrAcquireSwapchainImage(m_colorSwapchainInfo.swapchain, &acquireInfo, &colorImageIndex), "Failed to acquire Image from the Color Swapchain");

OPENXR_CHECK(xrAcquireSwapchainImage(m_depthSwapchainInfo.swapchain, &acquireInfo, &depthImageIndex), "Failed to acquire Image from the Depth Swapchain");

XrSwapchainImageWaitInfo waitInfo = {XR_TYPE_SWAPCHAIN_IMAGE_WAIT_INFO};

waitInfo.timeout = XR_INFINITE_DURATION;

OPENXR_CHECK(xrWaitSwapchainImage(m_colorSwapchainInfo.swapchain, &waitInfo), "Failed to wait for Image from the Color Swapchain");

OPENXR_CHECK(xrWaitSwapchainImage(m_depthSwapchainInfo.swapchain, &waitInfo), "Failed to wait for Image from the Depth Swapchain");

// Get the width and height and construct the viewport and scissors.

const uint32_t &width = m_viewConfigurationViews[0].recommendedImageRectWidth;

const uint32_t &height = m_viewConfigurationViews[0].recommendedImageRectHeight;

GraphicsAPI::Viewport viewport = {0.0f, 0.0f, (float)width, (float)height, 0.0f, 1.0f};

GraphicsAPI::Rect2D scissor = {{(int32_t)0, (int32_t)0}, {width, height}};

float nearZ = 0.05f;

float farZ = 100.0f;

// Fill out the XrCompositionLayerProjectionView structure specifying the pose and fov from the view.

// This also associates the swapchain image with this layer projection view.

// Per view in the view configuration:

for (uint32_t i = 0; i < viewCount; i++) {

    renderLayerInfo.layerProjectionViews[i] = { XR_TYPE_COMPOSITION_LAYER_PROJECTION_VIEW };

    renderLayerInfo.layerProjectionViews[i].pose = views[i].pose;

    renderLayerInfo.layerProjectionViews[i].fov = views[i].fov;

    renderLayerInfo.layerProjectionViews[i].subImage.swapchain = m_colorSwapchainInfo.swapchain;

    renderLayerInfo.layerProjectionViews[i].subImage.imageRect.offset.x = 0;

    renderLayerInfo.layerProjectionViews[i].subImage.imageRect.offset.y = 0;

    renderLayerInfo.layerProjectionViews[i].subImage.imageRect.extent.width = static_cast<int32_t>(width);

    renderLayerInfo.layerProjectionViews[i].subImage.imageRect.extent.height = static_cast<int32_t>(height);

    renderLayerInfo.layerProjectionViews[i].subImage.imageArrayIndex = i; // Useful for multiview rendering.

}

// Rendering code to clear the color and depth image views.

m_graphicsAPI->BeginRendering();

if (m_environmentBlendMode == XR_ENVIRONMENT_BLEND_MODE_OPAQUE) {

    // VR mode use a background color.

    m_graphicsAPI->ClearColor(m_colorSwapchainInfo.imageViews[colorImageIndex], 0.17f, 0.17f, 0.17f, 1.00f);

} else {

    // In AR mode make the background color black.

    m_graphicsAPI->ClearColor(m_colorSwapchainInfo.imageViews[colorImageIndex], 0.00f, 0.00f, 0.00f, 1.00f);

}

m_graphicsAPI->ClearDepth(m_depthSwapchainInfo.imageViews[depthImageIndex], 1.0f);

m_graphicsAPI->SetRenderAttachments(&m_colorSwapchainInfo.imageViews[colorImageIndex], 1, m_depthSwapchainInfo.imageViews[depthImageIndex], width, height, m_pipeline);

m_graphicsAPI->SetViewports(&viewport, 1);

m_graphicsAPI->SetScissors(&scissor, 1);

// Compute the view-projection transforms.

// All matrices (including OpenXR's) are column-major, right-handed.

for (uint32_t i = 0; i < viewCount; i++) {

    XrMatrix4x4f proj;

    XrMatrix4x4f_CreateProjectionFov(&proj, m_apiType, views[i].fov, nearZ, farZ);

    XrMatrix4x4f toView;

    XrVector3f scale1m{ 1.0f, 1.0f, 1.0f };

    XrMatrix4x4f_CreateTranslationRotationScale(&toView, &views[i].pose.position, &views[i].pose.orientation, &scale1m);

    XrMatrix4x4f view;

    XrMatrix4x4f_InvertRigidBody(&view, &toView);

    XrMatrix4x4f_Multiply(&cameraConstants.viewProj[i], &proj, &view);

}

renderCuboidIndex = 0;

// Draw a floor. Scale it by 2 in the X and Z, and 0.1 in the Y.

RenderCuboid({{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, -m_viewHeightM, 0.0f}}, {2.0f, 0.1f, 2.0f}, {0.4f, 0.5f, 0.5f});

// Draw a "table".

RenderCuboid({{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, -m_viewHeightM + 0.9f, -0.7f}}, {1.0f, 0.2f, 1.0f}, {0.6f, 0.6f, 0.4f});

// Draw some blocks at the controller positions:

for (int i = 0; i < 2; i++) {

    if (m_handPoseState[i].isActive) {

        RenderCuboid(m_handPose[i], {0.02f, 0.04f, 0.10f}, {1.f, 1.f, 1.f});

    }

}

for (int i = 0; i < m_blocks.size(); i++) {

    auto &thisBlock = m_blocks[i];

    XrVector3f sc = thisBlock.scale;

    if (i == m_nearBlock[0] || i == m_nearBlock[1])

        sc = thisBlock.scale * 1.05f;

    RenderCuboid(thisBlock.pose, sc, thisBlock.color);

}

m_graphicsAPI->EndRendering();

// Give the swapchain image back to OpenXR, allowing the compositor to use the image.

XrSwapchainImageReleaseInfo releaseInfo{XR_TYPE_SWAPCHAIN_IMAGE_RELEASE_INFO};

OPENXR_CHECK(xrReleaseSwapchainImage(m_colorSwapchainInfo.swapchain, &releaseInfo), "Failed to release Image back to the Color Swapchain");

OPENXR_CHECK(xrReleaseSwapchainImage(m_depthSwapchainInfo.swapchain, &releaseInfo), "Failed to release Image back to the Depth Swapchain");

// Fill out the XrCompositionLayerProjection structure for usage with xrEndFrame().

renderLayerInfo.layerProjection.layerFlags = XR_COMPOSITION_LAYER_BLEND_TEXTURE_SOURCE_ALPHA_BIT | XR_COMPOSITION_LAYER_CORRECT_CHROMATIC_ABERRATION_BIT;

renderLayerInfo.layerProjection.space = m_localSpace;

renderLayerInfo.layerProjection.viewCount = static_cast<uint32_t>(renderLayerInfo.layerProjectionViews.size());

renderLayerInfo.layerProjection.views = renderLayerInfo.layerProjectionViews.data();

return true;
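The key change in the projection views is that every view shares one swapchain and full-size image rectangle, and only the array layer differs. A minimal sketch of that mapping follows; SubImage is a hypothetical stand-in for the relevant XrSwapchainSubImage fields and MakeSubImages is an illustrative helper, so that the example compiles without the OpenXR headers.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the XrSwapchainSubImage fields that change with multiview.
struct SubImage {
    int32_t width;
    int32_t height;
    uint32_t imageArrayIndex;
};

// Every view uses the same extent; only the array layer differs per view.
std::vector<SubImage> MakeSubImages(uint32_t viewCount, uint32_t width, uint32_t height) {
    std::vector<SubImage> subImages(viewCount);
    for (uint32_t i = 0; i < viewCount; i++) {
        subImages[i].width = static_cast<int32_t>(width);
        subImages[i].height = static_cast<int32_t>(height);
        subImages[i].imageArrayIndex = i; // Layer 0 for the left eye, layer 1 for the right eye.
    }
    return subImages;
}
```

In the Chapter 4 code, each view instead pointed at its own swapchain with an array index of 0.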

You can now debug and run your application using Multiview rendering.

6.1.3 GraphicsAPI and Multiview

In this section, we describe some of the background changes to the shaders and GraphicsAPI needed to support multiview rendering.

First, add the VK_KHR_MULTIVIEW_EXTENSION_NAME or "VK_KHR_multiview" string to the device extensions list when creating the VkDevice.
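Before enabling an extension, it is usually worth confirming that the device actually reports it. The sketch below shows one way to filter a requested list against an available list using plain strings. SelectSupportedExtensions is a hypothetical helper, and in real code the available names would come from vkEnumerateDeviceExtensionProperties rather than a hand-written vector.

```cpp
#include <cstring>
#include <string>
#include <vector>

// Returns pointers to the requested names that also appear in the available list.
// The returned pointers refer to strings owned by requested, which must outlive the result.
std::vector<const char *> SelectSupportedExtensions(const std::vector<std::string> &requested,
                                                    const std::vector<std::string> &available) {
    std::vector<const char *> active;
    for (const std::string &request : requested) {
        for (const std::string &candidate : available) {
            if (std::strcmp(request.c_str(), candidate.c_str()) == 0) {
                active.push_back(request.c_str());
                break; // Found a match, move on to the next requested name.
            }
        }
    }
    return active;
}
```

If "VK_KHR_multiview" is missing from the result, the application could fall back to the per-view rendering path from Chapter 4.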

At pipeline creation, chain a VkRenderPassMultiviewCreateInfo structure to the VkRenderPassCreateInfo structure via its pNext pointer when creating the VkRenderPass. Note that there is similar functionality for VK_KHR_dynamic_rendering. The viewMask value, passed through pViewMasks, is a bitfield in which each set bit identifies a view that rendering in the subpass is broadcast to.

bool multiview = false;

VkRenderPassMultiviewCreateInfoKHR multiviewCreateInfo;

#if XR_TUTORIAL_ENABLE_MULTIVIEW

if (pipelineCI.viewMask != 0) {

multiviewCreateInfo.sType = VK_STRUCTURE_TYPE_RENDER_PASS_MULTIVIEW_CREATE_INFO;

multiviewCreateInfo.pNext = nullptr;

multiviewCreateInfo.subpassCount = 1;

multiviewCreateInfo.pViewMasks = &pipelineCI.viewMask;

multiviewCreateInfo.dependencyCount = 0;

multiviewCreateInfo.pViewOffsets = nullptr;

multiviewCreateInfo.correlationMaskCount = 1;

multiviewCreateInfo.pCorrelationMasks = &pipelineCI.viewMask;

multiview = true;

}

#endif

VkRenderPass renderPass{};

VkRenderPassCreateInfo renderPassCI;

renderPassCI.sType = VK_STRUCTURE_TYPE_RENDER_PASS_CREATE_INFO;

renderPassCI.pNext = multiview ? &multiviewCreateInfo : nullptr;

renderPassCI.flags = 0;

renderPassCI.attachmentCount = static_cast<uint32_t>(attachmentDescriptions.size());

renderPassCI.pAttachments = attachmentDescriptions.data();

renderPassCI.subpassCount = 1;

renderPassCI.pSubpasses = &subpassDescription;

renderPassCI.dependencyCount = 1;

renderPassCI.pDependencies = &subpassDependency;

VULKAN_CHECK(vkCreateRenderPass(device, &renderPassCI, nullptr, &renderPass), "Failed to create RenderPass.");

Modify the shader to use gl_ViewIndex and the GL_EXT_multiview GLSL extension.

--- /home/runner/work/OpenXR-Tutorials/OpenXR-Tutorials/Shaders/VertexShader.glsl

+++ /home/runner/work/OpenXR-Tutorials/OpenXR-Tutorials/Chapter6_1_Multiview/ShadersMultiview/VertexShader_VK_MV.glsl

@@ -4,9 +4,10 @@

#version 450

#extension GL_KHR_vulkan_glsl : enable

+#extension GL_EXT_multiview : enable

layout(std140, binding = 0) uniform CameraConstants {

- mat4 viewProj;

- mat4 modelViewProj;

+ mat4 viewProj[2];

+ mat4 modelViewProj[2];

mat4 model;

vec4 color;

vec4 pad1;

@@ -14,14 +15,14 @@

vec4 pad3;

};

layout(std140, binding = 1) uniform Normals {

- vec4 normals[6];

+ vec4 normals[6];

};

layout(location = 0) in vec4 a_Positions;

layout(location = 0) out flat uvec2 o_TexCoord;

layout(location = 1) out flat vec3 o_Normal;

layout(location = 2) out flat vec3 o_Color;

void main() {

- gl_Position = modelViewProj * a_Positions;

+ gl_Position = modelViewProj[gl_ViewIndex] * a_Positions;

int face = gl_VertexIndex / 6;

o_TexCoord = uvec2(face, 0);

o_Normal = (model * normals[face]).xyz;

6.2 Graphics API

GraphicsAPI

Note: GraphicsAPI is by no means production-ready code or reflective of good practice with specific APIs. It is there solely to provide working samples in this tutorial, and demonstrate some basic rendering and interaction with OpenXR.

This tutorial uses polymorphic classes; GraphicsAPI_... derives from the base GraphicsAPI class. The derived class is based on your graphics API selection. Include the header and cpp files for both GraphicsAPI and your chosen GraphicsAPI_... class. GraphicsAPI.h includes the headers and macros needed to set up your platform and graphics API. Below are code snippets that show how to set up the XR_USE_PLATFORM_... and XR_USE_GRAPHICS_API_... macros for your platform along with any relevant headers. In the first code block, there’s also a reference to XR_TUTORIAL_USE_..., which we set up in the CMakeLists.txt. This tutorial demonstrates all five graphics APIs.

The code below is an example of how you might implement the inclusion and definition of the relevant graphics API header along with the XR_USE_PLATFORM_... and XR_USE_GRAPHICS_API_... macros.

#include <HelperFunctions.h>

#if defined(_WIN32)

#define WIN32_LEAN_AND_MEAN

#define NOMINMAX

#include <Windows.h>

#include <unknwn.h>

#define XR_USE_PLATFORM_WIN32

#if defined(XR_TUTORIAL_USE_D3D11)

#define XR_USE_GRAPHICS_API_D3D11

#endif

#if defined(XR_TUTORIAL_USE_D3D12)

#define XR_USE_GRAPHICS_API_D3D12

#endif

#if defined(XR_TUTORIAL_USE_OPENGL)

#define XR_USE_GRAPHICS_API_OPENGL

#endif

#if defined(XR_TUTORIAL_USE_VULKAN)

#define XR_USE_GRAPHICS_API_VULKAN

#endif

#endif // _WIN32

#if defined(XR_USE_GRAPHICS_API_VULKAN)

#include <vulkan/vulkan.h>

#endif

// OpenXR Helper

#include <OpenXRHelper.h>

When setting up the graphics API core objects, there are things that we need to know from OpenXR in order to create the objects correctly. These could include the version of the graphics API required, a reference to a specific GPU, required instance and/or device extensions, etc. Below are code examples showing how to set up your graphics API for OpenXR.

GraphicsAPI_Vulkan::GraphicsAPI_Vulkan(XrInstance m_xrInstance, XrSystemId systemId) {

// Instance

LoadPFN_XrFunctions(m_xrInstance);

XrGraphicsRequirementsVulkanKHR graphicsRequirements{XR_TYPE_GRAPHICS_REQUIREMENTS_VULKAN_KHR};

OPENXR_CHECK(xrGetVulkanGraphicsRequirementsKHR(m_xrInstance, systemId, &graphicsRequirements), "Failed to get Graphics Requirements for Vulkan.");

VkApplicationInfo ai;

ai.sType = VK_STRUCTURE_TYPE_APPLICATION_INFO;

ai.pNext = nullptr;

ai.pApplicationName = "OpenXR Tutorial - Vulkan";

ai.applicationVersion = 1;

ai.pEngineName = "OpenXR Tutorial - Vulkan Engine";

ai.engineVersion = 1;

ai.apiVersion = VK_MAKE_API_VERSION(0, XR_VERSION_MAJOR(graphicsRequirements.minApiVersionSupported), XR_VERSION_MINOR(graphicsRequirements.minApiVersionSupported), 0);

uint32_t instanceExtensionCount = 0;

VULKAN_CHECK(vkEnumerateInstanceExtensionProperties(nullptr, &instanceExtensionCount, nullptr), "Failed to enumerate InstanceExtensionProperties.");

std::vector<VkExtensionProperties> instanceExtensionProperties;

instanceExtensionProperties.resize(instanceExtensionCount);

VULKAN_CHECK(vkEnumerateInstanceExtensionProperties(nullptr, &instanceExtensionCount, instanceExtensionProperties.data()), "Failed to enumerate InstanceExtensionProperties.");

const std::vector<std::string> &openXrInstanceExtensionNames = GetInstanceExtensionsForOpenXR(m_xrInstance, systemId);

for (const std::string &requestExtension : openXrInstanceExtensionNames) {

for (const VkExtensionProperties &extensionProperty : instanceExtensionProperties) {

if (strcmp(requestExtension.c_str(), extensionProperty.extensionName) == 0) {

activeInstanceExtensions.push_back(requestExtension.c_str());

break;

}

}

}

VkInstanceCreateInfo instanceCI;

instanceCI.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;

instanceCI.pNext = nullptr;

instanceCI.flags = 0;

instanceCI.pApplicationInfo = &ai;

instanceCI.enabledLayerCount = static_cast<uint32_t>(activeInstanceLayers.size());

instanceCI.ppEnabledLayerNames = activeInstanceLayers.data();

instanceCI.enabledExtensionCount = static_cast<uint32_t>(activeInstanceExtensions.size());

instanceCI.ppEnabledExtensionNames = activeInstanceExtensions.data();

VULKAN_CHECK(vkCreateInstance(&instanceCI, nullptr, &instance), "Failed to create Vulkan Instance.");

// Physical Device

uint32_t physicalDeviceCount = 0;

std::vector<VkPhysicalDevice> physicalDevices;

VULKAN_CHECK(vkEnumeratePhysicalDevices(instance, &physicalDeviceCount, nullptr), "Failed to enumerate PhysicalDevices.");

physicalDevices.resize(physicalDeviceCount);

VULKAN_CHECK(vkEnumeratePhysicalDevices(instance, &physicalDeviceCount, physicalDevices.data()), "Failed to enumerate PhysicalDevices.");

VkPhysicalDevice physicalDeviceFromXR;

OPENXR_CHECK(xrGetVulkanGraphicsDeviceKHR(m_xrInstance, systemId, instance, &physicalDeviceFromXR), "Failed to get Graphics Device for Vulkan.");

auto physicalDeviceFromXR_it = std::find(physicalDevices.begin(), physicalDevices.end(), physicalDeviceFromXR);

if (physicalDeviceFromXR_it != physicalDevices.end()) {

physicalDevice = *physicalDeviceFromXR_it;

} else {

std::cout << "ERROR: Vulkan: Failed to find PhysicalDevice for OpenXR." << std::endl;

// Select the first available device.

physicalDevice = physicalDevices[0];

}

// Device

std::vector<VkQueueFamilyProperties> queueFamilyProperties;

uint32_t queueFamilyPropertiesCount = 0;

vkGetPhysicalDeviceQueueFamilyProperties(physicalDevice, &queueFamilyPropertiesCount, nullptr);

queueFamilyProperties.resize(queueFamilyPropertiesCount);

vkGetPhysicalDeviceQueueFamilyProperties(physicalDevice, &queueFamilyPropertiesCount, queueFamilyProperties.data());

std::vector<VkDeviceQueueCreateInfo> deviceQueueCIs;

std::vector<std::vector<float>> queuePriorities;

queuePriorities.resize(queueFamilyProperties.size());

deviceQueueCIs.resize(queueFamilyProperties.size());

for (size_t i = 0; i < deviceQueueCIs.size(); i++) {

for (size_t j = 0; j < queueFamilyProperties[i].queueCount; j++)

queuePriorities[i].push_back(1.0f);

deviceQueueCIs[i].sType = VK_STRUCTURE_TYPE_DEVICE_QUEUE_CREATE_INFO;

deviceQueueCIs[i].pNext = nullptr;

deviceQueueCIs[i].flags = 0;

deviceQueueCIs[i].queueFamilyIndex = static_cast<uint32_t>(i);

deviceQueueCIs[i].queueCount = queueFamilyProperties[i].queueCount;

deviceQueueCIs[i].pQueuePriorities = queuePriorities[i].data();

if (BitwiseCheck(queueFamilyProperties[i].queueFlags, VkQueueFlags(VK_QUEUE_GRAPHICS_BIT)) && queueFamilyIndex == 0xFFFFFFFF && queueIndex == 0xFFFFFFFF) {

queueFamilyIndex = static_cast<uint32_t>(i);

queueIndex = 0;

}

}

uint32_t deviceExtensionCount = 0;

VULKAN_CHECK(vkEnumerateDeviceExtensionProperties(physicalDevice, 0, &deviceExtensionCount, 0), "Failed to enumerate DeviceExtensionProperties.");

std::vector<VkExtensionProperties> deviceExtensionProperties;

deviceExtensionProperties.resize(deviceExtensionCount);

VULKAN_CHECK(vkEnumerateDeviceExtensionProperties(physicalDevice, 0, &deviceExtensionCount, deviceExtensionProperties.data()), "Failed to enumerate DeviceExtensionProperties.");

const std::vector<std::string> &openXrDeviceExtensionNames = GetDeviceExtensionsForOpenXR(m_xrInstance, systemId);

for (const std::string &requestExtension : openXrDeviceExtensionNames) {

for (const VkExtensionProperties &extensionProperty : deviceExtensionProperties) {

if (strcmp(requestExtension.c_str(), extensionProperty.extensionName) == 0) {

activeDeviceExtensions.push_back(requestExtension.c_str());

break;

}

}

}

VkPhysicalDeviceFeatures features;

vkGetPhysicalDeviceFeatures(physicalDevice, &features);

VkDeviceCreateInfo deviceCI;

deviceCI.sType = VK_STRUCTURE_TYPE_DEVICE_CREATE_INFO;

deviceCI.pNext = nullptr;

deviceCI.flags = 0;

deviceCI.queueCreateInfoCount = static_cast<uint32_t>(deviceQueueCIs.size());

deviceCI.pQueueCreateInfos = deviceQueueCIs.data();

deviceCI.enabledLayerCount = 0;

deviceCI.ppEnabledLayerNames = nullptr;

deviceCI.enabledExtensionCount = static_cast<uint32_t>(activeDeviceExtensions.size());

deviceCI.ppEnabledExtensionNames = activeDeviceExtensions.data();

deviceCI.pEnabledFeatures = &features;

VULKAN_CHECK(vkCreateDevice(physicalDevice, &deviceCI, nullptr, &device), "Failed to create Device.");

VkCommandPoolCreateInfo cmdPoolCI;

cmdPoolCI.sType = VK_STRUCTURE_TYPE_COMMAND_POOL_CREATE_INFO;

cmdPoolCI.pNext = nullptr;

cmdPoolCI.flags = VK_COMMAND_POOL_CREATE_RESET_COMMAND_BUFFER_BIT;

cmdPoolCI.queueFamilyIndex = queueFamilyIndex;

VULKAN_CHECK(vkCreateCommandPool(device, &cmdPoolCI, nullptr, &cmdPool), "Failed to create CommandPool.");

VkCommandBufferAllocateInfo allocateInfo;

allocateInfo.sType = VK_STRUCTURE_TYPE_COMMAND_BUFFER_ALLOCATE_INFO;

allocateInfo.pNext = nullptr;

allocateInfo.commandPool = cmdPool;

allocateInfo.level = VK_COMMAND_BUFFER_LEVEL_PRIMARY;

allocateInfo.commandBufferCount = 1;

VULKAN_CHECK(vkAllocateCommandBuffers(device, &allocateInfo, &cmdBuffer), "Failed to allocate CommandBuffers.");

vkGetDeviceQueue(device, queueFamilyIndex, queueIndex, &queue);

VkFenceCreateInfo fenceCI{VK_STRUCTURE_TYPE_FENCE_CREATE_INFO};

fenceCI.sType = VK_STRUCTURE_TYPE_FENCE_CREATE_INFO;

fenceCI.pNext = nullptr;

fenceCI.flags = VK_FENCE_CREATE_SIGNALED_BIT;

VULKAN_CHECK(vkCreateFence(device, &fenceCI, nullptr, &fence), "Failed to create Fence.");

uint32_t maxSets = 1024;

std::vector<VkDescriptorPoolSize> poolSizes{

{VK_DESCRIPTOR_TYPE_SAMPLER, 16 * maxSets},

{VK_DESCRIPTOR_TYPE_SAMPLED_IMAGE, 16 * maxSets},

{VK_DESCRIPTOR_TYPE_STORAGE_IMAGE, 16 * maxSets},

{VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER, 16 * maxSets},

{VK_DESCRIPTOR_TYPE_STORAGE_BUFFER, 16 * maxSets}};

VkDescriptorPoolCreateInfo descPoolCI;

descPoolCI.sType = VK_STRUCTURE_TYPE_DESCRIPTOR_POOL_CREATE_INFO;

descPoolCI.pNext = nullptr;

descPoolCI.flags = VK_DESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SET_BIT;

descPoolCI.maxSets = maxSets;

descPoolCI.poolSizeCount = static_cast<uint32_t>(poolSizes.size());

descPoolCI.pPoolSizes = poolSizes.data();

VULKAN_CHECK(vkCreateDescriptorPool(device, &descPoolCI, nullptr, &descriptorPool), "Failed to create DescriptorPool");

}

GraphicsAPI_Vulkan::~GraphicsAPI_Vulkan() {

vkDestroyDescriptorPool(device, descriptorPool, nullptr);

vkDestroyFence(device, fence, nullptr);

vkFreeCommandBuffers(device, cmdPool, 1, &cmdBuffer);

vkDestroyCommandPool(device, cmdPool, nullptr);

vkDestroyDevice(device, nullptr);

vkDestroyInstance(instance, nullptr);

}

void GraphicsAPI_Vulkan::LoadPFN_XrFunctions(XrInstance m_xrInstance) {

OPENXR_CHECK(xrGetInstanceProcAddr(m_xrInstance, "xrGetVulkanGraphicsRequirementsKHR", (PFN_xrVoidFunction *)&xrGetVulkanGraphicsRequirementsKHR), "Failed to get InstanceProcAddr for xrGetVulkanGraphicsRequirementsKHR.");

OPENXR_CHECK(xrGetInstanceProcAddr(m_xrInstance, "xrGetVulkanInstanceExtensionsKHR", (PFN_xrVoidFunction *)&xrGetVulkanInstanceExtensionsKHR), "Failed to get InstanceProcAddr for xrGetVulkanInstanceExtensionsKHR.");

OPENXR_CHECK(xrGetInstanceProcAddr(m_xrInstance, "xrGetVulkanDeviceExtensionsKHR", (PFN_xrVoidFunction *)&xrGetVulkanDeviceExtensionsKHR), "Failed to get InstanceProcAddr for xrGetVulkanDeviceExtensionsKHR.");

OPENXR_CHECK(xrGetInstanceProcAddr(m_xrInstance, "xrGetVulkanGraphicsDeviceKHR", (PFN_xrVoidFunction *)&xrGetVulkanGraphicsDeviceKHR), "Failed to get InstanceProcAddr for xrGetVulkanGraphicsDeviceKHR.");

}

std::vector<std::string> GraphicsAPI_Vulkan::GetInstanceExtensionsForOpenXR(XrInstance m_xrInstance, XrSystemId systemId) {

uint32_t extensionNamesSize = 0;

OPENXR_CHECK(xrGetVulkanInstanceExtensionsKHR(m_xrInstance, systemId, 0, &extensionNamesSize, nullptr), "Failed to get Vulkan Instance Extensions.");

std::vector<char> extensionNames(extensionNamesSize);

OPENXR_CHECK(xrGetVulkanInstanceExtensionsKHR(m_xrInstance, systemId, extensionNamesSize, &extensionNamesSize, extensionNames.data()), "Failed to get Vulkan Instance Extensions.");

std::stringstream streamData(extensionNames.data());

std::vector<std::string> extensions;

std::string extension;

while (std::getline(streamData, extension, ' ')) {

extensions.push_back(extension);

}

return extensions;

}

std::vector<std::string> GraphicsAPI_Vulkan::GetDeviceExtensionsForOpenXR(XrInstance m_xrInstance, XrSystemId systemId) {

uint32_t extensionNamesSize = 0;

OPENXR_CHECK(xrGetVulkanDeviceExtensionsKHR(m_xrInstance, systemId, 0, &extensionNamesSize, nullptr), "Failed to get Vulkan Device Extensions.");

std::vector<char> extensionNames(extensionNamesSize);

OPENXR_CHECK(xrGetVulkanDeviceExtensionsKHR(m_xrInstance, systemId, extensionNamesSize, &extensionNamesSize, extensionNames.data()), "Failed to get Vulkan Device Extensions.");

std::stringstream streamData(extensionNames.data());

std::vector<std::string> extensions;

std::string extension;

while (std::getline(streamData, extension, ' ')) {

extensions.push_back(extension);

}

return extensions;

}
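The two helper functions above both split the space-delimited extension string returned by the runtime. As a minimal standalone sketch of that parsing step, with no OpenXR or Vulkan dependency: the function name `SplitExtensionNames` is hypothetical and not part of the tutorial's `GraphicsAPI_Vulkan` class, and unlike the helpers above it also skips empty tokens.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: splits a space-delimited list such as
// "VK_KHR_a VK_KHR_b" into individual names.
// Empty tokens (from repeated or trailing spaces) are skipped.
std::vector<std::string> SplitExtensionNames(const std::string &names) {
    std::stringstream stream(names);
    std::vector<std::string> result;
    std::string name;
    while (std::getline(stream, name, ' ')) {
        if (!name.empty()) {
            result.push_back(name);
        }
    }
    return result;
}
```

Returning `std::string` values rather than pointers into the original buffer means the caller does not depend on the lifetime of the character array filled in by the runtime.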

6.3 OpenXR API Layers

The OpenXR loader has a layer system that allows OpenXR API calls to pass through a number of optional layers that add functionality for the application. These are extremely useful for debugging.

The OpenXR SDK provides two API layers for us to use. Each has an associated library and .json file:

- XR_APILAYER_LUNARG_api_dump
- XR_APILAYER_LUNARG_core_validation

XR_APILAYER_LUNARG_api_dump simply logs verbose information to the output, describing in more detail what happened during each API call. XR_APILAYER_LUNARG_core_validation acts similarly to VK_LAYER_KHRONOS_validation in Vulkan: the layer intercepts each API call and performs validation to ensure conformance with the specification.
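The idea of a call passing through a stack of layers before reaching the runtime can be illustrated with a small conceptual sketch. This is not how the OpenXR loader is implemented; `ApiCall`, `Layer`, `BuildChain`, `MakeDumpLayer` and `MakeValidationLayer` are all hypothetical names, and the "API call" is reduced to a function that takes a name and returns a result code.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for an API entry point: takes a call name, returns a result code.
using ApiCall = std::function<int(const std::string &)>;

// A layer wraps the next callable in the chain and returns a new callable.
using Layer = std::function<ApiCall(ApiCall)>;

// Builds the chain so that layers[0] is called first and the runtime last.
ApiCall BuildChain(const std::vector<Layer> &layers, ApiCall runtime) {
    ApiCall chain = runtime;
    for (auto it = layers.rbegin(); it != layers.rend(); ++it) {
        chain = (*it)(chain);
    }
    return chain;
}

// Dump-style layer: records the call name, then forwards the call unchanged.
Layer MakeDumpLayer(std::vector<std::string> &log) {
    return [&log](ApiCall next) -> ApiCall {
        return [&log, next](const std::string &name) {
            log.push_back("dump: " + name);
            return next(name);
        };
    };
}

// Validation-style layer: rejects an empty call name before it reaches the runtime.
Layer MakeValidationLayer(std::vector<std::string> &log) {
    return [&log](ApiCall next) -> ApiCall {
        return [&log, next](const std::string &name) {
            if (name.empty()) {
                log.push_back("validation: rejected");
                return -1;
            }
            return next(name);
        };
    };
}
```

Each layer only knows about the next callable in the chain, which is why layers can be added or removed without changes to the application or the runtime.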

Other runtimes and hardware vendors may provide layers that are useful for debugging your XR system and/or application.

First, ensure that you are building the OpenXR-provided API layers from the OpenXR-SDK-Source.

# For FetchContent_Declare() and FetchContent_MakeAvailable()
include(FetchContent)

# openxr_loader - From github.com/KhronosGroup
set(BUILD_API_LAYERS ON CACHE INTERNAL "Use OpenXR layers")
set(BUILD_TESTS OFF CACHE INTERNAL "Build tests")
FetchContent_Declare(
    OpenXR
    EXCLUDE_FROM_ALL
    DOWNLOAD_EXTRACT_TIMESTAMP
    URL_HASH MD5=f52248ef83da9134bec2b2d8e0970677
    URL https://github.com/KhronosGroup/OpenXR-SDK-Source/archive/refs/tags/release-1.1.49.tar.gz
    SOURCE_DIR openxr
)
FetchContent_MakeAvailable(OpenXR)

To enable API layers, add the XR_API_LAYER_PATH=<path> environment variable to your project or your system, for example: XR_API_LAYER_PATH=<openxr_base>/<build_folder>/src/api_layers/;<another_path>. In this tutorial, the API layer files are found in <cmake_source_folder>/build/_deps/openxr-build/src/api_layers/.

Setting the XR_API_LAYER_PATH environment variable overrides the operating system’s default API layer paths. See OpenXR API Layers - Overriding the Default API Layer Paths. For more information on the default desktop API layer discovery, see OpenXR API Layers - Desktop API Layer Discovery.

The path must point to a folder containing a .json file similar to the one for XR_APILAYER_LUNARG_core_validation, shown below:

{
    "file_format_version": "1.0.0",
    "api_layer": {
        "name": "XR_APILAYER_LUNARG_core_validation",
        "library_path": "./XrApiLayer_core_validation.dll",
        "api_version": "1.0",
        "implementation_version": "1",
        "description": "API Layer to perform validation of api calls and parameters as they occur"
    }
}

This file points to the library that the loader should use for this API layer.
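When a layer does not load, a useful first check is whether the process actually sees the XR_API_LAYER_PATH value you expect. The sketch below reads an environment variable and splits it into individual folders. `GetEnvOrEmpty` and `SplitList` are hypothetical helper names, and the separator is left as a parameter because it is platform-dependent; the example value above uses `;`.

```cpp
#include <cstdlib>
#include <string>
#include <vector>

// Returns the value of an environment variable, or an empty string if it is unset.
std::string GetEnvOrEmpty(const char *name) {
    const char *value = std::getenv(name);
    return value ? std::string(value) : std::string();
}

// Splits a separator-delimited list into entries, skipping empty entries.
std::vector<std::string> SplitList(const std::string &value, char separator) {
    std::vector<std::string> entries;
    std::string::size_type start = 0;
    while (start <= value.size()) {
        std::string::size_type end = value.find(separator, start);
        if (end == std::string::npos) {
            end = value.size();
        }
        if (end > start) {
            entries.push_back(value.substr(start, end - start));
        }
        start = end + 1;
    }
    return entries;
}
```

Calling `SplitList(GetEnvOrEmpty("XR_API_LAYER_PATH"), ';')` at startup and logging the result shows which folders will be searched for layer .json files.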

To select which API layers to use, there are two options:

- Add the XR_ENABLE_API_LAYERS=<layer_name> environment variable to your project or your system, for example: XR_ENABLE_API_LAYERS=XR_APILAYER_LUNARG_test1;XR_APILAYER_LUNARG_test2.
- When creating the XrInstance, specify the requested API layers in the XrInstanceCreateInfo structure.
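For the XrInstanceCreateInfo route, it is usually safer to request only layers that are actually available. The sketch below shows that filtering step using plain strings so that it compiles without the OpenXR headers. `SelectApiLayers` and `ToCStrings` are hypothetical helpers; in a real application the available names would come from xrEnumerateApiLayerProperties, and the resulting pointer array and its size would be assigned to the enabledApiLayerNames and enabledApiLayerCount members of XrInstanceCreateInfo.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Returns the requested layer names that also appear in the available list,
// preserving the order of the request.
std::vector<std::string> SelectApiLayers(const std::vector<std::string> &requested,
                                         const std::vector<std::string> &available) {
    std::vector<std::string> active;
    for (const std::string &name : requested) {
        if (std::find(available.begin(), available.end(), name) != available.end()) {
            active.push_back(name);
        }
    }
    return active;
}

// Converts the names to the const char* array form used by C-style create-info structs.
// The pointers stay valid only while 'names' is alive and unmodified.
std::vector<const char *> ToCStrings(const std::vector<std::string> &names) {
    std::vector<const char *> pointers;
    pointers.reserve(names.size());
    for (const std::string &name : names) {
        pointers.push_back(name.c_str());
    }
    return pointers;
}
```

Keeping the `std::string` vector alive for as long as the pointer array is in use avoids dangling pointers when the create-info structure is passed to the API.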

6.4 Color Science

Because OpenXR supports both linear and sRGB color spaces for compositing, it is helpful to have a deeper knowledge of color science, especially if you are planning to use sRGB formats and have the OpenXR runtime/compositor do automatic conversions for you.

For more information on color spaces and gamma encoding, see J. Guy Davidson’s video presentation on the subject.
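As a concrete illustration of what a linear/sRGB conversion involves, the sketch below implements the standard sRGB transfer function for a single channel in the range 0 to 1. The function names are hypothetical, and this is not code from the tutorial or from any OpenXR runtime; it only shows the arithmetic that such a conversion performs.

```cpp
#include <cmath>

// Decodes one sRGB-encoded channel in [0, 1] to linear light.
float SrgbToLinear(float c) {
    return (c <= 0.04045f) ? c / 12.92f
                           : std::pow((c + 0.055f) / 1.055f, 2.4f);
}

// Encodes one linear channel in [0, 1] with the sRGB transfer function.
float LinearToSrgb(float l) {
    return (l <= 0.0031308f) ? l * 12.92f
                             : 1.055f * std::pow(l, 1.0f / 2.4f) - 0.055f;
}
```

For example, an sRGB-encoded value of 0.5 decodes to roughly 0.214 in linear space, which is why treating sRGB data as linear (or the reverse) visibly changes the brightness of the final image.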

6.5 Multithreaded Rendering

OpenXR supports multithreaded rendering, in cases ranging from simple stand-alone applications to complex game and rendering engines. In this tutorial, we have used a single thread to simulate and render our XR application, but modern desktops and mobile devices have multiple cores, and therefore multiple threads, for us to use. Effective use of multiple CPU threads in combination with parallel GPU work can deliver higher performance for the application.

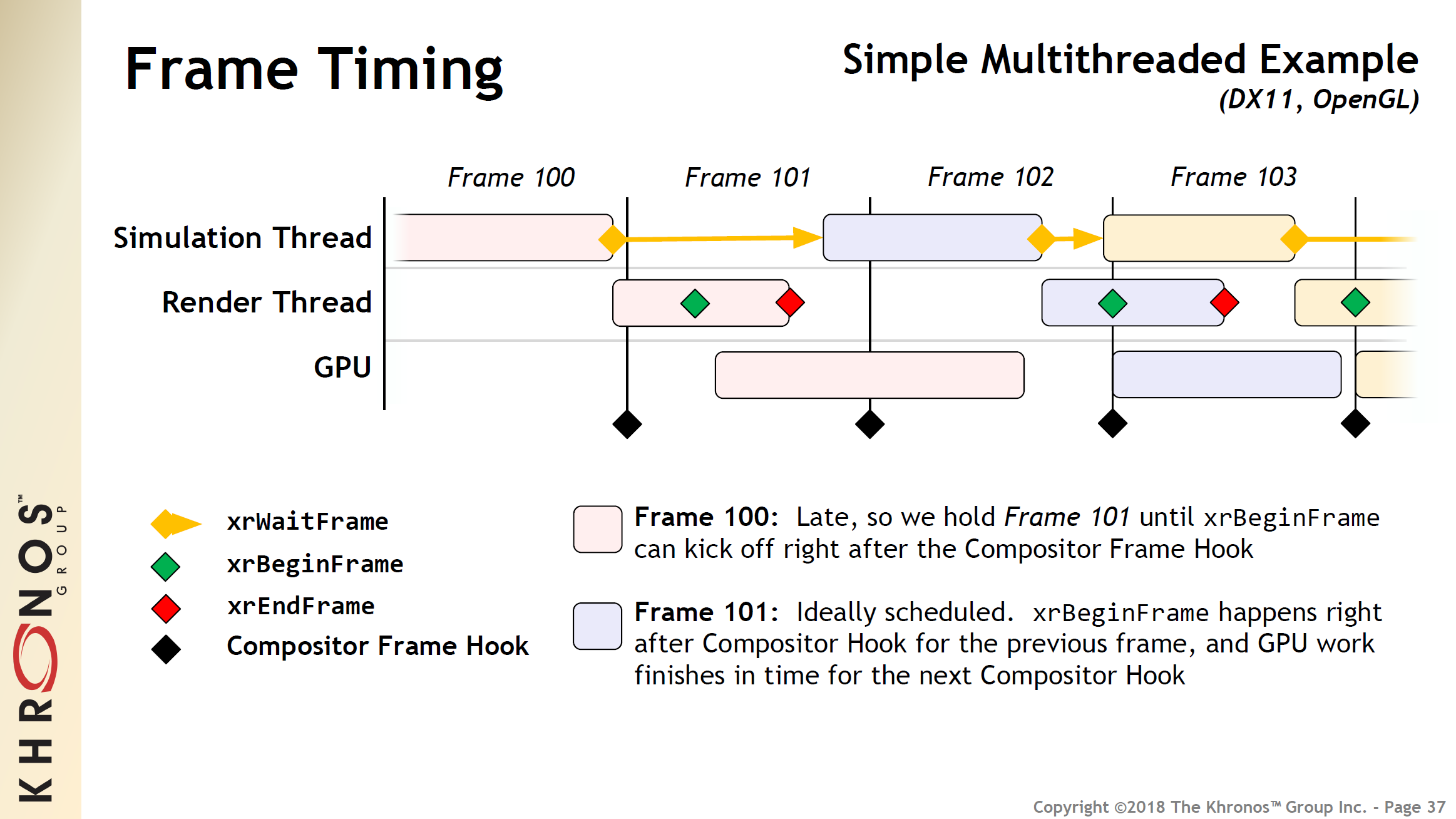

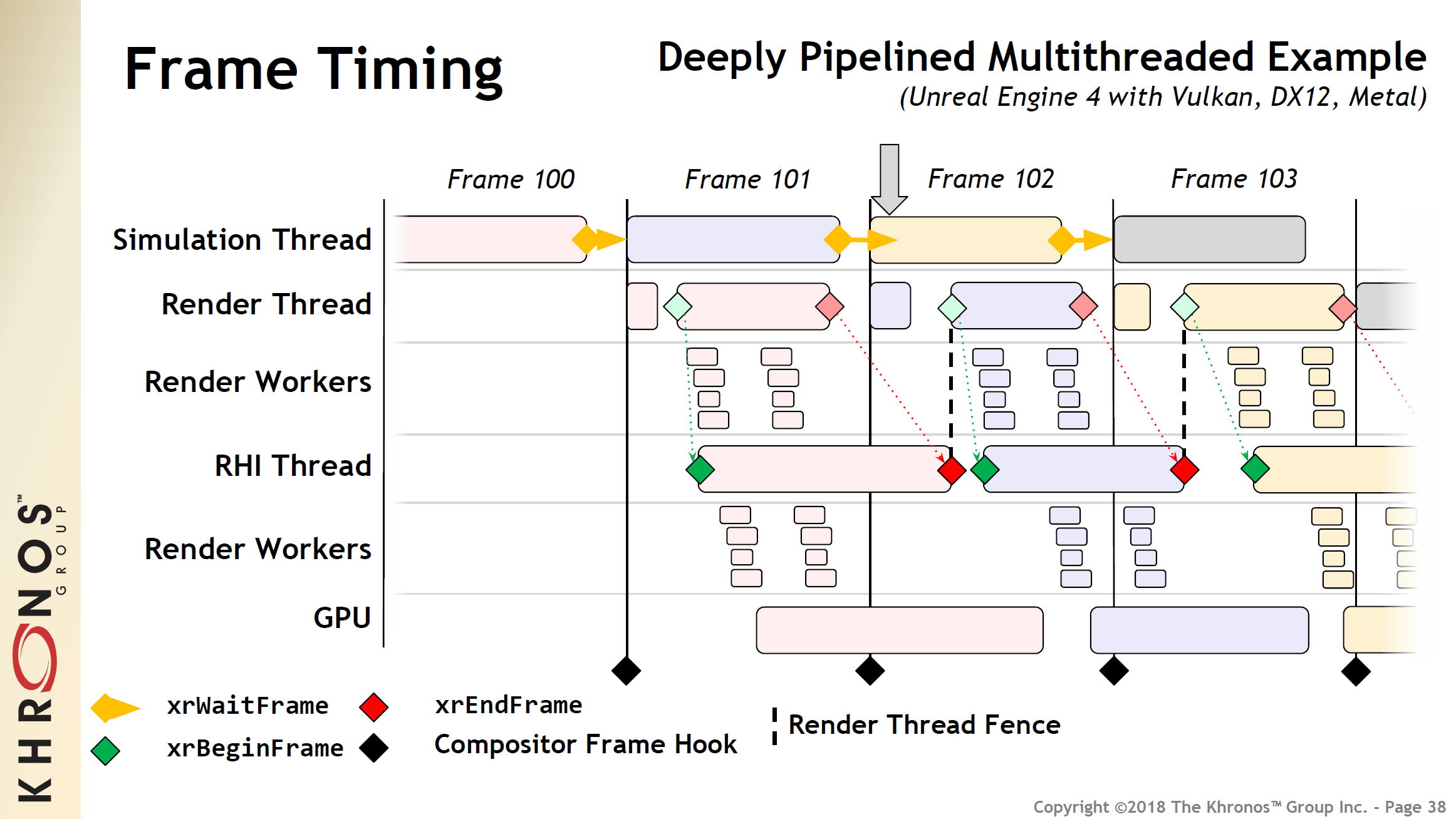

The way to allow OpenXR to interact with multiple threads is to split up the three xr...Frame calls. Currently, xrWaitFrame, xrBeginFrame and xrEndFrame are all called on a single thread. In the multithreaded case, xrWaitFrame stays on the ‘simulation’ thread, whilst xrBeginFrame and xrEndFrame move to a ‘render’ thread. Below are examples of how thread interact with the three xr...Frame calls and the XR compositor frame hook.

Simple:

Complex:

Here’s a link to the PDF to download and a link to the video recording of the presentation.

Note: This talk was given before the release of the OpenXR 1.0 Specification; therefore details may vary or be inaccurate.

6.6 Conclusion

In this chapter, we discussed a few of the possible next steps in your OpenXR journey. Be sure to refer back to the OpenXR Specification and look out for updates, both there and here, as the specification develops.

The text of the OpenXR Tutorial is by Roderick Kennedy and Andrew Richards of Simul Software Ltd. The design of the site is by Calland Creative Ltd. The site is overseen by the Khronos OpenXR Working Group. Thanks to all volunteers who tested the site through its development.

Version: v1.0.13