3 Graphics

The goal of this chapter is to build an application that creates and clears color and depth buffers and draws some geometry to the views within the scope of the OpenXR render loop, and to demonstrate how OpenXR interacts with the graphics APIs.

In the workspace directory, update the CMakeLists.txt by adding the following CMake code to the end of the file:

add_subdirectory(Chapter3)

Now, create a Chapter3 folder in the workspace directory and into that folder copy the main.cpp and CMakeLists.txt from Chapter2. In the Chapter3/CMakeLists.txt update these lines:

cmake_minimum_required(VERSION 3.28.3)

set(PROJECT_NAME OpenXRTutorialChapter3)

project("${PROJECT_NAME}")

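Create a Shaders folder in your root workspace folder, then download the shader files (VertexShader.hlsl and PixelShader.hlsl, as referenced by the CMake code that follows) and put them in it.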

Underneath the SOURCES and HEADERS section, add the following CMake code specifying the location of the shaders:

set(HLSL_SHADERS "../Shaders/VertexShader.hlsl" "../Shaders/PixelShader.hlsl")

Underneath the section where you specify your Graphics API, add the following CMake code:

# D3D11 and D3D12 HLSL

if(WIN32)

include(fxc_shader)

set_property(

SOURCE ${HLSL_SHADERS} PROPERTY VS_SETTINGS

"ExcludedFromBuild=true"

)

set_source_files_properties(

../Shaders/VertexShader.hlsl PROPERTIES ShaderType "vs"

)

set_source_files_properties(

../Shaders/PixelShader.hlsl PROPERTIES ShaderType "ps"

)

# D3D11: Using Shader Model 5.0

# D3D12: Using Shader Model 5.1

foreach(shadermodel 5_0 5_1)

foreach(FILE ${HLSL_SHADERS})

get_filename_component(FILE_WE ${FILE} NAME_WE)

get_source_file_property(shadertype ${FILE} ShaderType)

fxc_shader(

INPUT

"${CMAKE_CURRENT_SOURCE_DIR}/${FILE}"

OUTPUT

"${CMAKE_CURRENT_BINARY_DIR}/${FILE_WE}_${shadermodel}.cso"

OUTPUT_PDB

"${CMAKE_CURRENT_BINARY_DIR}/${FILE_WE}_${shadermodel}.pdb"

PROFILE

${shadertype}_${shadermodel}

ENTRY_POINT

main

)

# Make our project depend on these files

target_sources(

${PROJECT_NAME}

PRIVATE

"${CMAKE_CURRENT_BINARY_DIR}/${FILE_WE}_${shadermodel}.cso"

)

endforeach()

endforeach()

endif()

3.1 Creating Swapchains

As with rendering graphics to a 2D display, OpenXR uses the concept of swapchains. A swapchain is a series of images used to present the rendered graphics to a display/window/view. There are usually 2 or 3 images in the swapchain, which allows the platform to present them smoothly to the user and create the illusion of motion within the image.

All graphics APIs have the concept of a swapchain with varying levels of exposure in the API. For OpenXR development, you will not create the API-specific swapchain. Instead, we use OpenXR to create swapchains and the OpenXR compositor, which is part of an XR runtime, to present rendered graphics to the views. XR applications are unique in that they often have multiple views that need to be rendered to create the XR experience. Listed below are a couple of scenarios with differing view counts:

1 view - Viewer on a phone, tablet or monitor.

2 views - Stereoscopic headset.

Orthogonal to multiple views is the layering of multiple images. You could, for example, have a background that is a pass-through of your environment, a stereoscopic view of rendered graphics and a quadrilateral overlay of a HUD (head-up display) or UI elements, all of which could have different spatial orientations. This layering is handled by the XR compositor, which composites the layers correctly for each view: that quad overlay might be behind the user, and thus shouldn’t be rendered to the eye views. Composition layers will be discussed later in Chapter 3.2.3.

Firstly, we will update the class in the Chapter3/main.cpp to add the new methods and members. Copy the highlighted code below.

class OpenXRTutorial {

public:

// [...] Constructor and Destructor created in previous chapters.

void Run() {

CreateInstance();

CreateDebugMessenger();

GetInstanceProperties();

GetSystemID();

GetViewConfigurationViews();

CreateSession();

CreateSwapchains();

while (m_applicationRunning) {

PollSystemEvents();

PollEvents();

if (m_sessionRunning) {

// Draw Frame.

}

}

DestroySwapchains();

DestroySession();

DestroyDebugMessenger();

DestroyInstance();

}

private:

// [...] Methods created in previous chapters.

void GetViewConfigurationViews()

{

}

void CreateSwapchains()

{

}

void DestroySwapchains()

{

}

private:

// [...] Member created in previous chapters.

std::vector<XrViewConfigurationType> m_applicationViewConfigurations = {XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO, XR_VIEW_CONFIGURATION_TYPE_PRIMARY_MONO};

std::vector<XrViewConfigurationType> m_viewConfigurations;

XrViewConfigurationType m_viewConfiguration = XR_VIEW_CONFIGURATION_TYPE_MAX_ENUM;

std::vector<XrViewConfigurationView> m_viewConfigurationViews;

struct SwapchainInfo {

XrSwapchain swapchain = XR_NULL_HANDLE;

int64_t swapchainFormat = 0;

std::vector<void *> imageViews;

};

std::vector<SwapchainInfo> m_colorSwapchainInfos = {};

std::vector<SwapchainInfo> m_depthSwapchainInfos = {};

};

We will explore the added methods in the sub-chapters below.

3.1.1 View Configurations

The first thing we need to do is get all the view configuration types available to our XR system. A view configuration type is a ‘semantically meaningful set of one or more views for which an application can render images’. (8. View Configurations.) There are both mono and stereo types, along with others provided by hardware/software vendors.

typedef enum XrViewConfigurationType {

XR_VIEW_CONFIGURATION_TYPE_PRIMARY_MONO = 1,

XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO = 2,

XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO_WITH_FOVEATED_INSET = 1000037000,

XR_VIEW_CONFIGURATION_TYPE_SECONDARY_MONO_FIRST_PERSON_OBSERVER_MSFT = 1000054000,

XR_VIEW_CONFIGURATION_TYPE_PRIMARY_QUAD_VARJO = XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO_WITH_FOVEATED_INSET,

XR_VIEW_CONFIGURATION_TYPE_MAX_ENUM = 0x7FFFFFFF

} XrViewConfigurationType;

We call xrEnumerateViewConfigurations twice, first to get the count of the types for our XR system, and second to fill in the data to the std::vector<XrViewConfigurationType>. Reference: XrViewConfigurationType. Next, we check against a list of view configuration types that are supported by the application and select one.

Now, we need to get all of the views available to our view configuration. It is worth parsing the name of this type: XrViewConfigurationView. We can break the type name up as “XrViewConfiguration” - “View”: it relates to one view in the view configuration, which may contain multiple views. We call xrEnumerateViewConfigurationViews twice, first to get the count of the views in the view configuration, and second to fill in the data to the std::vector<XrViewConfigurationView>.
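The two-call pattern described above can be tried out without an XR runtime. In the sketch below, MockEnumerate is a hypothetical stand-in for a function such as xrEnumerateViewConfigurations; it is not part of OpenXR, and the values it returns are made up purely for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an OpenXR enumeration function; NOT a real OpenXR call.
// It follows the same convention: with a capacity of 0 it only reports the required
// count; otherwise it writes the items into the caller's buffer.
inline bool MockEnumerate(uint32_t capacity, uint32_t *countOutput, int32_t *items) {
    static const int32_t kAvailable[] = {2, 1};  // Made-up values for illustration.
    const uint32_t available = 2;
    *countOutput = available;
    if (capacity == 0) {
        return true;  // First call: report the count only.
    }
    if (capacity < available || items == nullptr) {
        return false;  // The caller's buffer is too small.
    }
    for (uint32_t i = 0; i < available; i++) {
        items[i] = kAvailable[i];
    }
    return true;
}

// Two-call idiom: query the count, size the vector, then fill it.
inline std::vector<int32_t> EnumerateAll() {
    uint32_t count = 0;
    bool ok = MockEnumerate(0, &count, nullptr);
    assert(ok);
    std::vector<int32_t> items(count);
    ok = MockEnumerate(count, &count, items.data());
    assert(ok);
    (void)ok;            // Avoid an unused-variable warning when asserts are compiled out.
    items.resize(count); // The second call may report fewer items than the first.
    return items;
}
```

Calling EnumerateAll() returns a vector holding both mock entries in the order the stand-in reports them, which mirrors how m_viewConfigurations is filled in this chapter.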

Add the following code to the GetViewConfigurationViews() method:

// Gets the View Configuration Types. The first call gets the count of the array that will be returned. The next call fills out the array.

uint32_t viewConfigurationCount = 0;

OPENXR_CHECK(xrEnumerateViewConfigurations(m_xrInstance, m_systemID, 0, &viewConfigurationCount, nullptr), "Failed to enumerate View Configurations.");

m_viewConfigurations.resize(viewConfigurationCount);

OPENXR_CHECK(xrEnumerateViewConfigurations(m_xrInstance, m_systemID, viewConfigurationCount, &viewConfigurationCount, m_viewConfigurations.data()), "Failed to enumerate View Configurations.");

// Pick the first application-supported View Configuration Type that is also supported by the hardware.

for (const XrViewConfigurationType &viewConfiguration : m_applicationViewConfigurations) {

if (std::find(m_viewConfigurations.begin(), m_viewConfigurations.end(), viewConfiguration) != m_viewConfigurations.end()) {

m_viewConfiguration = viewConfiguration;

break;

}

}

if (m_viewConfiguration == XR_VIEW_CONFIGURATION_TYPE_MAX_ENUM) {

std::cerr << "Failed to find a view configuration type. Defaulting to XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO." << std::endl;

m_viewConfiguration = XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO;

}

// Gets the View Configuration Views. The first call gets the count of the array that will be returned. The next call fills out the array.

uint32_t viewConfigurationViewCount = 0;

OPENXR_CHECK(xrEnumerateViewConfigurationViews(m_xrInstance, m_systemID, m_viewConfiguration, 0, &viewConfigurationViewCount, nullptr), "Failed to enumerate ViewConfiguration Views.");

m_viewConfigurationViews.resize(viewConfigurationViewCount, {XR_TYPE_VIEW_CONFIGURATION_VIEW});

OPENXR_CHECK(xrEnumerateViewConfigurationViews(m_xrInstance, m_systemID, m_viewConfiguration, viewConfigurationViewCount, &viewConfigurationViewCount, m_viewConfigurationViews.data()), "Failed to enumerate ViewConfiguration Views.");
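The selection loop above walks the application's list in order and takes the first entry that the runtime also reports, so the application's preference wins over the runtime's ordering. A minimal sketch of that logic as a reusable helper follows; the name PickFirstSupported is hypothetical and does not appear in the tutorial code.

```cpp
#include <algorithm>
#include <vector>

// Returns the first entry of 'preferred' that also appears in 'available',
// or 'fallback' when the two lists have nothing in common.
// The order of 'preferred' decides the result; the order of 'available' does not.
template <typename T>
T PickFirstSupported(const std::vector<T> &preferred, const std::vector<T> &available, T fallback) {
    for (const T &candidate : preferred) {
        if (std::find(available.begin(), available.end(), candidate) != available.end()) {
            return candidate;
        }
    }
    return fallback;
}
```

The same shape of loop is used later in this chapter to choose an environment blend mode, so a helper like this could serve both places.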

From the code in Chapter 2.3.1, we modify our assignment of XrSessionBeginInfo::primaryViewConfigurationType in the PollEvents() method to use the class member m_viewConfiguration.

if (sessionStateChanged->state == XR_SESSION_STATE_READY) {

// SessionState is ready. Begin the XrSession using the XrViewConfigurationType.

XrSessionBeginInfo sessionBeginInfo{XR_TYPE_SESSION_BEGIN_INFO};

sessionBeginInfo.primaryViewConfigurationType = m_viewConfiguration;

OPENXR_CHECK(xrBeginSession(m_session, &sessionBeginInfo), "Failed to begin Session.");

m_sessionRunning = true;

}

3.1.2 Enumerate the Swapchain Formats

For each runtime, the OpenXR compositor has certain preferred image formats that should be used by the swapchain. When you call xrEnumerateSwapchainFormats, the runtime returns, for the given XrSession and its graphics API, an array of API-specific formats ordered by preference. xrEnumerateSwapchainFormats takes a pointer to the first element in an array of int64_t values. Each int64_t value is a simple type cast from a DXGI_FORMAT, GLenum or VkFormat. The runtime “should support R8G8B8A8 and R8G8B8A8 sRGB formats if possible” (OpenXR Specification 10.1. Swapchain Image Management).

Both linear and sRGB color spaces are supported and one may have preference over the other. In cases where you are compositing multiple layers, you may wish to use linear color spaces only, as OpenXR’s compositor will perform all blend operations in a linear color space for correctness. For certain runtimes, systems and/or applications, sRGB may be preferred, especially if there’s just a single opaque layer to composite.

If you wish to use an sRGB color format, you must use an API-specific sRGB color format such as DXGI_FORMAT_R8G8B8A8_UNORM_SRGB, GL_SRGB8_ALPHA8 or VK_FORMAT_R8G8B8A8_SRGB for the OpenXR runtime to automatically do sRGB-to-linear color space conversions when reading the image.
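For reference, the sRGB-to-linear conversion mentioned above corresponds to the standard sRGB transfer function. The sketch below shows that function and its inverse on a single normalized channel. The function names are hypothetical, and a runtime or GPU performs this conversion internally rather than through code like this.

```cpp
#include <cmath>

// Standard sRGB decoding: encoded channel value in [0, 1] -> linear light in [0, 1].
inline float SrgbToLinear(float c) {
    return (c <= 0.04045f) ? (c / 12.92f) : std::pow((c + 0.055f) / 1.055f, 2.4f);
}

// Standard sRGB encoding: linear light in [0, 1] -> encoded channel value in [0, 1].
inline float LinearToSrgb(float l) {
    return (l <= 0.0031308f) ? (l * 12.92f) : (1.055f * std::pow(l, 1.0f / 2.4f) - 0.055f);
}
```

An encoded mid-gray of 0.5 decodes to roughly 0.214 in linear light, which is why blending sRGB-encoded values directly gives different results from blending in a linear color space.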

We also check that the runtime supports a depth format so that we can create a depth swapchain. You can check this with xrEnumerateSwapchainFormats. Unfortunately, neither the OpenXR 1.0 core specification nor revision 6 of the XR_KHR_composition_layer_depth extension guarantees that runtimes support depth formats for swapchains. Most XR systems and/or runtimes do support depth swapchain formats. This will be useful for XR_KHR_composition_layer_depth and AR applications. See Chapter 5.2.

If no swapchain depth format is found, applications should use a depth buffer/texture to render graphics. This depth buffer/texture cannot be used with XR_KHR_composition_layer_depth.

Copy the code below into the CreateSwapchains() method:

// Get the supported swapchain formats as an array of int64_t and ordered by runtime preference.

uint32_t formatCount = 0;

OPENXR_CHECK(xrEnumerateSwapchainFormats(m_session, 0, &formatCount, nullptr), "Failed to enumerate Swapchain Formats");

std::vector<int64_t> formats(formatCount);

OPENXR_CHECK(xrEnumerateSwapchainFormats(m_session, formatCount, &formatCount, formats.data()), "Failed to enumerate Swapchain Formats");

if (m_graphicsAPI->SelectDepthSwapchainFormat(formats) == 0) {

std::cerr << "Failed to find depth format for Swapchain." << std::endl;

DEBUG_BREAK;

}

3.1.3 Create the Swapchains

We will create an XrSwapchain for each view in the system. First, we will resize our color and depth std::vector<SwapchainInfo> s to match the number of views in the system. Next, we set up a loop to iterate through and create the swapchains and all the image views.

Append the following two code blocks to the CreateSwapchains() method:

// Resize the SwapchainInfo vectors to match the number of views in the View Configuration.

m_colorSwapchainInfos.resize(m_viewConfigurationViews.size());

m_depthSwapchainInfos.resize(m_viewConfigurationViews.size());

for (size_t i = 0; i < m_viewConfigurationViews.size(); i++) {

}

Inside the for loop of the CreateSwapchains() method, add the following code:

SwapchainInfo &colorSwapchainInfo = m_colorSwapchainInfos[i];

SwapchainInfo &depthSwapchainInfo = m_depthSwapchainInfos[i];

// Fill out an XrSwapchainCreateInfo structure and create an XrSwapchain.

// Color.

XrSwapchainCreateInfo swapchainCI{XR_TYPE_SWAPCHAIN_CREATE_INFO};

swapchainCI.createFlags = 0;

swapchainCI.usageFlags = XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_COLOR_ATTACHMENT_BIT;

swapchainCI.format = m_graphicsAPI->SelectColorSwapchainFormat(formats); // Use GraphicsAPI to select the first compatible format.

swapchainCI.sampleCount = m_viewConfigurationViews[i].recommendedSwapchainSampleCount; // Use the recommended values from the XrViewConfigurationView.

swapchainCI.width = m_viewConfigurationViews[i].recommendedImageRectWidth;

swapchainCI.height = m_viewConfigurationViews[i].recommendedImageRectHeight;

swapchainCI.faceCount = 1;

swapchainCI.arraySize = 1;

swapchainCI.mipCount = 1;

OPENXR_CHECK(xrCreateSwapchain(m_session, &swapchainCI, &colorSwapchainInfo.swapchain), "Failed to create Color Swapchain");

colorSwapchainInfo.swapchainFormat = swapchainCI.format; // Save the swapchain format for later use.

// Depth.

swapchainCI.createFlags = 0;

swapchainCI.usageFlags = XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT;

swapchainCI.format = m_graphicsAPI->SelectDepthSwapchainFormat(formats); // Use GraphicsAPI to select the first compatible format.

swapchainCI.sampleCount = m_viewConfigurationViews[i].recommendedSwapchainSampleCount; // Use the recommended values from the XrViewConfigurationView.

swapchainCI.width = m_viewConfigurationViews[i].recommendedImageRectWidth;

swapchainCI.height = m_viewConfigurationViews[i].recommendedImageRectHeight;

swapchainCI.faceCount = 1;

swapchainCI.arraySize = 1;

swapchainCI.mipCount = 1;

OPENXR_CHECK(xrCreateSwapchain(m_session, &swapchainCI, &depthSwapchainInfo.swapchain), "Failed to create Depth Swapchain");

depthSwapchainInfo.swapchainFormat = swapchainCI.format; // Save the swapchain format for later use.

Here, we’ve filled out the XrSwapchainCreateInfo structure. The sampleCount, width and height members were assigned from the related XrViewConfigurationView. We set the createFlags to 0 as we require no constraints or additional functionality. We set the usageFlags to XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_COLOR_ATTACHMENT_BIT, requesting that the images are suitable to be read in a shader and to be used as a render target/color attachment. For the depth swapchain, we set the usageFlags to XR_SWAPCHAIN_USAGE_SAMPLED_BIT | XR_SWAPCHAIN_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT, as these images will be used as depth/stencil attachments.

DirectX 12

XrSwapchainUsageFlagBits | Corresponding D3D12 resource flag bits
XR_SWAPCHAIN_USAGE_COLOR_ATTACHMENT_BIT | D3D12_RESOURCE_FLAG_ALLOW_RENDER_TARGET
XR_SWAPCHAIN_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT | D3D12_RESOURCE_FLAG_ALLOW_DEPTH_STENCIL
XR_SWAPCHAIN_USAGE_UNORDERED_ACCESS_BIT | D3D12_RESOURCE_FLAG_ALLOW_UNORDERED_ACCESS
XR_SWAPCHAIN_USAGE_TRANSFER_SRC_BIT | ignored
XR_SWAPCHAIN_USAGE_TRANSFER_DST_BIT | ignored
XR_SWAPCHAIN_USAGE_SAMPLED_BIT | D3D12_RESOURCE_FLAG_DENY_SHADER_RESOURCE
XR_SWAPCHAIN_USAGE_MUTABLE_FORMAT_BIT | ignored
XR_SWAPCHAIN_USAGE_INPUT_ATTACHMENT_BIT_KHR | ignored

Then, we set the values for faceCount, arraySize and mipCount. faceCount describes the number of faces in the image and is used for creating cubemap textures. arraySize describes the number of layers in an image. Here, we used 1, as we have separate swapchains per view/eye. If your graphics API supports multiview rendering (See Chapter 6.1.), you could pass 2 and have a 2D image array. mipCount describes the number of texture detail levels; this is useful when using the swapchain image as a sampled image in a shader. Finally, we set the format. Here, we asked our GraphicsAPI_... class to pick a suitable format for the swapchain from the enumerated formats we acquired earlier.
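This tutorial uses a mipCount of 1. For context only, if an application did want a full mip chain, the number of levels for a given image size is commonly computed as floor(log2(max(width, height))) + 1. The sketch below shows that arithmetic; the function name is hypothetical, and whether a given runtime accepts a mipCount greater than 1 is not covered here.

```cpp
#include <algorithm>
#include <cstdint>

// Number of mip levels in a full chain, from the base level down to 1x1.
// Equivalent to floor(log2(max(width, height))) + 1 for non-zero sizes;
// returns 0 if both dimensions are 0.
inline uint32_t FullMipChainLength(uint32_t width, uint32_t height) {
    uint32_t largest = std::max(width, height);
    uint32_t levels = 0;
    while (largest > 0) {
        levels++;
        largest >>= 1;  // Each mip level halves the larger dimension.
    }
    return levels;
}
```

For example, a 1024 x 512 image has 11 levels in a full chain (1024, 512, 256, and so on down to 1).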

Here is the code for GraphicsAPI::SelectColorSwapchainFormat() and GraphicsAPI::SelectDepthSwapchainFormat():

int64_t GraphicsAPI::SelectColorSwapchainFormat(const std::vector<int64_t> &formats) {

const std::vector<int64_t> &supportSwapchainFormats = GetSupportedColorSwapchainFormats();

const std::vector<int64_t>::const_iterator &swapchainFormatIt = std::find_first_of(formats.begin(), formats.end(),

std::begin(supportSwapchainFormats), std::end(supportSwapchainFormats));

if (swapchainFormatIt == formats.end()) {

std::cout << "ERROR: Unable to find supported Color Swapchain Format" << std::endl;

DEBUG_BREAK;

return 0;

}

return *swapchainFormatIt;

}

int64_t GraphicsAPI::SelectDepthSwapchainFormat(const std::vector<int64_t> &formats) {

const std::vector<int64_t> &supportSwapchainFormats = GetSupportedDepthSwapchainFormats();

const std::vector<int64_t>::const_iterator &swapchainFormatIt = std::find_first_of(formats.begin(), formats.end(),

std::begin(supportSwapchainFormats), std::end(supportSwapchainFormats));

if (swapchainFormatIt == formats.end()) {

std::cout << "ERROR: Unable to find supported Depth Swapchain Format" << std::endl;

DEBUG_BREAK;

return 0;

}

return *swapchainFormatIt;

}

The above code is an excerpt from Common/GraphicsAPI.cpp

These functions call the pure virtual methods GraphicsAPI::GetSupportedColorSwapchainFormats() and GraphicsAPI::GetSupportedDepthSwapchainFormats() respectively, which each API-specific class implements. Each returns an array of API-specific formats that the GraphicsAPI library supports.

DirectX 12

const std::vector<int64_t> GraphicsAPI_D3D12::GetSupportedColorSwapchainFormats() {

return {

DXGI_FORMAT_R8G8B8A8_UNORM,

DXGI_FORMAT_B8G8R8A8_UNORM,

DXGI_FORMAT_R8G8B8A8_UNORM_SRGB,

DXGI_FORMAT_B8G8R8A8_UNORM_SRGB};

}

const std::vector<int64_t> GraphicsAPI_D3D12::GetSupportedDepthSwapchainFormats() {

return {

DXGI_FORMAT_D32_FLOAT,

DXGI_FORMAT_D16_UNORM};

}

The above code is an excerpt from Common/GraphicsAPI_D3D12.cpp
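One consequence of using std::find_first_of in the selection functions is worth spelling out: the search walks the runtime's format list and stops at the first entry that appears anywhere in the supported list. The runtime's preference order therefore decides the result, not the order of the supported list. The sketch below demonstrates this with made-up format IDs; SelectFormat is a hypothetical free function, not part of the GraphicsAPI class.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Returns the first format in 'runtimeFormats' that also appears in 'supportedFormats',
// or 0 when there is no common format. The order of 'runtimeFormats' decides the result.
inline int64_t SelectFormat(const std::vector<int64_t> &runtimeFormats,
                            const std::vector<int64_t> &supportedFormats) {
    auto it = std::find_first_of(runtimeFormats.begin(), runtimeFormats.end(),
                                 supportedFormats.begin(), supportedFormats.end());
    return (it == runtimeFormats.end()) ? 0 : *it;
}
```

With runtime formats {30, 10, 20} and supported formats {10, 20, 30}, the function returns 30, because 30 is the runtime's first choice even though it is listed last among the supported formats.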

Lastly, we called xrCreateSwapchain to create our XrSwapchain, which, if successful, returned XR_SUCCESS and the XrSwapchain was non-null. We copied our swapchain format to our SwapchainInfo::swapchainFormat for later usage.

The same process is repeated for the depth swapchain.

3.1.4 Enumerate Swapchain Images

Now that we have created the swapchain, we need to get access to its images. We first call xrEnumerateSwapchainImages to get the count of the images in the swapchain. Next, we set up an array of structures to store the images from the XrSwapchain. In this tutorial, this array of structures, which stores the swapchain’s images, is held in the GraphicsAPI_... class. We use the XrSwapchain handle as the key to a std::unordered_map to get the type and the images relating to the swapchain. They are stored inside the GraphicsAPI_... class because OpenXR returns to the application an array of structures that contain the API-specific handles to the swapchain images. GraphicsAPI::AllocateSwapchainImageData() is a virtual method implemented by each graphics API; it stores the type, resizes an API-specific std::vector<XrSwapchainImage...KHR> and returns a pointer to the first element in that array, cast to an XrSwapchainImageBaseHeader pointer.

The same process is repeated for the depth swapchain.

Copy and append the following code into the for loop of the CreateSwapchains() method.

// Get the number of images in the color/depth swapchain and allocate Swapchain image data via GraphicsAPI to store the returned array.

uint32_t colorSwapchainImageCount = 0;

OPENXR_CHECK(xrEnumerateSwapchainImages(colorSwapchainInfo.swapchain, 0, &colorSwapchainImageCount, nullptr), "Failed to enumerate Color Swapchain Images.");

XrSwapchainImageBaseHeader *colorSwapchainImages = m_graphicsAPI->AllocateSwapchainImageData(colorSwapchainInfo.swapchain, GraphicsAPI::SwapchainType::COLOR, colorSwapchainImageCount);

OPENXR_CHECK(xrEnumerateSwapchainImages(colorSwapchainInfo.swapchain, colorSwapchainImageCount, &colorSwapchainImageCount, colorSwapchainImages), "Failed to enumerate Color Swapchain Images.");

uint32_t depthSwapchainImageCount = 0;

OPENXR_CHECK(xrEnumerateSwapchainImages(depthSwapchainInfo.swapchain, 0, &depthSwapchainImageCount, nullptr), "Failed to enumerate Depth Swapchain Images.");

XrSwapchainImageBaseHeader *depthSwapchainImages = m_graphicsAPI->AllocateSwapchainImageData(depthSwapchainInfo.swapchain, GraphicsAPI::SwapchainType::DEPTH, depthSwapchainImageCount);

OPENXR_CHECK(xrEnumerateSwapchainImages(depthSwapchainInfo.swapchain, depthSwapchainImageCount, &depthSwapchainImageCount, depthSwapchainImages), "Failed to enumerate Depth Swapchain Images.");

Below is an excerpt of the GraphicsAPI::AllocateSwapchainImageData() method and the XrSwapchainImage...KHR structure relating to your chosen graphics API.

DirectX 12

XrSwapchainImageBaseHeader *GraphicsAPI_D3D12::AllocateSwapchainImageData(XrSwapchain swapchain, SwapchainType type, uint32_t count) {

swapchainImagesMap[swapchain].first = type;

swapchainImagesMap[swapchain].second.resize(count, {XR_TYPE_SWAPCHAIN_IMAGE_D3D12_KHR});

return reinterpret_cast<XrSwapchainImageBaseHeader *>(swapchainImagesMap[swapchain].second.data());

}

The above code is an excerpt from Common/GraphicsAPI_D3D12.cpp

swapchainImagesMap is of type std::unordered_map<XrSwapchain, std::pair<SwapchainType, std::vector<XrSwapchainImageD3D12KHR>>>. Reference: XrSwapchainImageD3D12KHR.

typedef struct XrSwapchainImageD3D12KHR {

XrStructureType type;

void* XR_MAY_ALIAS next;

ID3D12Resource* texture;

} XrSwapchainImageD3D12KHR;

The above code is an excerpt from openxr/openxr_platform.h

The structure contains an ID3D12Resource * member that is the handle to one of the images in the swapchain.

3.1.5 Create the Swapchain Image Views

Now, we create the image views: one per image in the color and depth XrSwapchain s. In this tutorial, we have a GraphicsAPI::ImageViewCreateInfo structure and virtual method GraphicsAPI::CreateImageView() that creates the API-specific objects. We create swapchain image views of both the color and depth swapchains.

Append the following code into the for loop of the CreateSwapchains() method.

// Per image in the swapchains, fill out a GraphicsAPI::ImageViewCreateInfo structure and create a color/depth image view.

for (uint32_t j = 0; j < colorSwapchainImageCount; j++) {

GraphicsAPI::ImageViewCreateInfo imageViewCI;

imageViewCI.image = m_graphicsAPI->GetSwapchainImage(colorSwapchainInfo.swapchain, j);

imageViewCI.type = GraphicsAPI::ImageViewCreateInfo::Type::RTV;

imageViewCI.view = GraphicsAPI::ImageViewCreateInfo::View::TYPE_2D;

imageViewCI.format = colorSwapchainInfo.swapchainFormat;

imageViewCI.aspect = GraphicsAPI::ImageViewCreateInfo::Aspect::COLOR_BIT;

imageViewCI.baseMipLevel = 0;

imageViewCI.levelCount = 1;

imageViewCI.baseArrayLayer = 0;

imageViewCI.layerCount = 1;

colorSwapchainInfo.imageViews.push_back(m_graphicsAPI->CreateImageView(imageViewCI));

}

for (uint32_t j = 0; j < depthSwapchainImageCount; j++) {

GraphicsAPI::ImageViewCreateInfo imageViewCI;

imageViewCI.image = m_graphicsAPI->GetSwapchainImage(depthSwapchainInfo.swapchain, j);

imageViewCI.type = GraphicsAPI::ImageViewCreateInfo::Type::DSV;

imageViewCI.view = GraphicsAPI::ImageViewCreateInfo::View::TYPE_2D;

imageViewCI.format = depthSwapchainInfo.swapchainFormat;

imageViewCI.aspect = GraphicsAPI::ImageViewCreateInfo::Aspect::DEPTH_BIT;

imageViewCI.baseMipLevel = 0;

imageViewCI.levelCount = 1;

imageViewCI.baseArrayLayer = 0;

imageViewCI.layerCount = 1;

depthSwapchainInfo.imageViews.push_back(m_graphicsAPI->CreateImageView(imageViewCI));

}

Each graphics API overrides the virtual function GraphicsAPI::GetSwapchainImage(), which returns an API-specific handle to the image, which is cast to a void *.

DirectX 12

virtual void* GetSwapchainImage(XrSwapchain swapchain, uint32_t index) override {

ID3D12Resource* image = swapchainImagesMap[swapchain].second[index].texture;

D3D12_RESOURCE_STATES state = swapchainImagesMap[swapchain].first == SwapchainType::COLOR ? D3D12_RESOURCE_STATE_RENDER_TARGET : D3D12_RESOURCE_STATE_DEPTH_WRITE;

imageStates[image] = state;

return image;

}

The above code is an excerpt from Common/GraphicsAPI_D3D12.h

For Direct3D 12, the ID3D12Resource * returned has all of its subresource states in D3D12_RESOURCE_STATE_RENDER_TARGET for color and D3D12_RESOURCE_STATE_DEPTH_WRITE for depth. This is a requirement of the OpenXR 1.0 D3D12 extension. See: 12.13. XR_KHR_D3D12_enable.

For the color and depth image views, we use the previously stored color/depth image format, that we used when creating their respective swapchains. We store our newly created color and depth image views for the swapchains in separate SwapchainInfo::imageViews instantiations.

3.1.6 Destroy the Swapchain

When the main render loop has finished and the application is shutting down, we need to destroy our created XrSwapchain s. This is done by calling xrDestroySwapchain passing the XrSwapchain as a parameter. It will return XR_SUCCESS if successful. At the same time, we destroy all of the image views (both color and depth) by calling GraphicsAPI::DestroyImageView() and freeing all the allocated swapchain image data with GraphicsAPI::FreeSwapchainImageData().

// Per view in the view configuration:

for (size_t i = 0; i < m_viewConfigurationViews.size(); i++) {

SwapchainInfo &colorSwapchainInfo = m_colorSwapchainInfos[i];

SwapchainInfo &depthSwapchainInfo = m_depthSwapchainInfos[i];

// Destroy the color and depth image views from GraphicsAPI.

for (void *&imageView : colorSwapchainInfo.imageViews) {

m_graphicsAPI->DestroyImageView(imageView);

}

for (void *&imageView : depthSwapchainInfo.imageViews) {

m_graphicsAPI->DestroyImageView(imageView);

}

// Free the Swapchain Image Data.

m_graphicsAPI->FreeSwapchainImageData(colorSwapchainInfo.swapchain);

m_graphicsAPI->FreeSwapchainImageData(depthSwapchainInfo.swapchain);

// Destroy the swapchains.

OPENXR_CHECK(xrDestroySwapchain(colorSwapchainInfo.swapchain), "Failed to destroy Color Swapchain");

OPENXR_CHECK(xrDestroySwapchain(depthSwapchainInfo.swapchain), "Failed to destroy Depth Swapchain");

}
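The body of GraphicsAPI::FreeSwapchainImageData() is not shown in this chapter. The sketch below is one guess at what such a method might do, assuming the std::unordered_map keyed by swapchain handle described in 3.1.4. All types here (FakeSwapchain, FakeSwapchainImage, ImageStore) are stand-ins so that the sketch compiles without the OpenXR or D3D12 headers; they are not the tutorial's actual classes.

```cpp
#include <cstdint>
#include <unordered_map>
#include <utility>
#include <vector>

// Stand-in types (assumptions), used instead of XrSwapchain and XrSwapchainImageD3D12KHR.
using FakeSwapchain = uint64_t;
enum class SwapchainType { COLOR, DEPTH };
struct FakeSwapchainImage {
    void *texture = nullptr;
};

struct ImageStore {
    std::unordered_map<FakeSwapchain, std::pair<SwapchainType, std::vector<FakeSwapchainImage>>> swapchainImagesMap;

    // Mirrors the shape of AllocateSwapchainImageData(): record the type and size the array.
    FakeSwapchainImage *Allocate(FakeSwapchain swapchain, SwapchainType type, uint32_t count) {
        auto &entry = swapchainImagesMap[swapchain];
        entry.first = type;
        entry.second.resize(count);
        return entry.second.data();
    }

    // Possible counterpart: drop the entry so the image array is released.
    void Free(FakeSwapchain swapchain) {
        swapchainImagesMap.erase(swapchain);
    }
};
```

In this sketch, freeing only erases the map entry; the images themselves belong to the runtime and are released when the swapchain is destroyed.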

We now have swapchains and image views, ready for rendering. Next, we set up the render loop for OpenXR!

3.2 Building a RenderLoop

With most of the OpenXR objects now set up, we can turn our attention to rendering graphics. Two further OpenXR objects are needed to render: the ‘reference space’, which pertains to where the user is, and the ‘environment blend mode’, which pertains to what the user sees of the external environment around them.

Then, with those final pieces in place, we can look to the RenderFrame() and RenderLayer() code to invoke graphics work on the GPU and present it back to OpenXR and its compositor through the use of the composition layers and within the scope of an XR Frame.

Update the methods and members in the class. Copy the highlighted code:

class OpenXRTutorial {

private:

struct RenderLayerInfo;

public:

// [...] Constructor and Destructor created in previous chapters.

void Run() {

CreateInstance();

CreateDebugMessenger();

GetInstanceProperties();

GetSystemID();

GetViewConfigurationViews();

GetEnvironmentBlendModes();

CreateSession();

CreateReferenceSpace();

CreateSwapchains();

while (m_applicationRunning) {

PollSystemEvents();

PollEvents();

if (m_sessionRunning) {

RenderFrame();

}

}

DestroySwapchains();

DestroyReferenceSpace();

DestroySession();

DestroyDebugMessenger();

DestroyInstance();

}

private:

// [...] Methods created in previous chapters.

void GetViewConfigurationViews()

{

// [...]

}

void CreateSwapchains()

{

// [...]

}

void DestroySwapchains()

{

// [...]

}

void GetEnvironmentBlendModes()

{

}

void CreateReferenceSpace()

{

}

void DestroyReferenceSpace()

{

}

void RenderFrame()

{

}

bool RenderLayer(RenderLayerInfo& renderLayerInfo)

{

}

private:

// [...] Members created in previous chapters.

std::vector<XrViewConfigurationType> m_applicationViewConfigurations = {XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO, XR_VIEW_CONFIGURATION_TYPE_PRIMARY_MONO};

std::vector<XrViewConfigurationType> m_viewConfigurations;

XrViewConfigurationType m_viewConfiguration = XR_VIEW_CONFIGURATION_TYPE_MAX_ENUM;

std::vector<XrViewConfigurationView> m_viewConfigurationViews;

struct SwapchainInfo {

XrSwapchain swapchain = XR_NULL_HANDLE;

int64_t swapchainFormat = 0;

std::vector<void *> imageViews;

};

std::vector<SwapchainInfo> m_colorSwapchainInfos = {};

std::vector<SwapchainInfo> m_depthSwapchainInfos = {};

std::vector<XrEnvironmentBlendMode> m_applicationEnvironmentBlendModes = {XR_ENVIRONMENT_BLEND_MODE_OPAQUE, XR_ENVIRONMENT_BLEND_MODE_ADDITIVE};

std::vector<XrEnvironmentBlendMode> m_environmentBlendModes = {};

XrEnvironmentBlendMode m_environmentBlendMode = XR_ENVIRONMENT_BLEND_MODE_MAX_ENUM;

XrSpace m_localSpace = XR_NULL_HANDLE;

struct RenderLayerInfo {

XrTime predictedDisplayTime;

std::vector<XrCompositionLayerBaseHeader *> layers;

XrCompositionLayerProjection layerProjection = {XR_TYPE_COMPOSITION_LAYER_PROJECTION};

std::vector<XrCompositionLayerProjectionView> layerProjectionViews;

};

};

3.2.1 Environment Blend Modes

Some XR experiences rely on blending the real world and rendered graphics together. Choosing the correct environment blend mode is vital for creating immersion in both virtual and augmented realities.

This blending is done at the final stage after the compositor has flattened and blended all the compositing layers passed to OpenXR at the end of the XR frame.

The enum XrEnvironmentBlendMode describes how OpenXR should blend the rendered view(s) with the external environment behind the screen(s). The values are:

VR: XR_ENVIRONMENT_BLEND_MODE_OPAQUE is the virtual reality case, where the real world is obscured.

AR: XR_ENVIRONMENT_BLEND_MODE_ADDITIVE or XR_ENVIRONMENT_BLEND_MODE_ALPHA_BLEND are used to composite rendered images with the external environment.

XrEnvironmentBlendMode | Description
XR_ENVIRONMENT_BLEND_MODE_OPAQUE | The composition layers will be displayed with no view of the physical world behind them. The composited image will be interpreted as an RGB image, ignoring the composited alpha channel.
XR_ENVIRONMENT_BLEND_MODE_ADDITIVE | The composition layers will be additively blended with the real world behind the display. The composited image will be interpreted as an RGB image, ignoring the composited alpha channel during the additive blending. This will cause black composited pixels to appear transparent.
XR_ENVIRONMENT_BLEND_MODE_ALPHA_BLEND | The composition layers will be alpha-blended with the real world behind the display. The composited image will be interpreted as an RGBA image, with the composited alpha channel determining each pixel’s level of blending with the real world behind the display.

XrEnvironmentBlendMode - Enumerant Descriptions.

typedef enum XrEnvironmentBlendMode {

XR_ENVIRONMENT_BLEND_MODE_OPAQUE = 1,

XR_ENVIRONMENT_BLEND_MODE_ADDITIVE = 2,

XR_ENVIRONMENT_BLEND_MODE_ALPHA_BLEND = 3,

XR_ENVIRONMENT_BLEND_MODE_MAX_ENUM = 0x7FFFFFFF

} XrEnvironmentBlendMode;

The above code is an excerpt from openxr/openxr.h
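To make the three blend modes concrete, the sketch below models each one as simple per-pixel arithmetic on normalized RGB values. This is a simplified illustration only. It assumes straight (non-premultiplied) alpha and treats the real world as an RGB value, whereas actual devices blend optically or inside the runtime. The type and function names are hypothetical.

```cpp
#include <algorithm>

struct Rgb {
    float r, g, b;
};

// Opaque: the environment is not visible; only the composited image is shown.
inline Rgb BlendOpaque(Rgb composited, Rgb /*environment*/) {
    return composited;
}

// Additive: composited light is added to the environment, so black adds nothing.
inline Rgb BlendAdditive(Rgb composited, Rgb environment) {
    return {std::min(composited.r + environment.r, 1.0f),
            std::min(composited.g + environment.g, 1.0f),
            std::min(composited.b + environment.b, 1.0f)};
}

// Alpha blend: alpha selects between the composited image (1) and the environment (0).
inline Rgb BlendAlpha(Rgb composited, float alpha, Rgb environment) {
    return {composited.r * alpha + environment.r * (1.0f - alpha),
            composited.g * alpha + environment.g * (1.0f - alpha),
            composited.b * alpha + environment.b * (1.0f - alpha)};
}
```

Under this model, a black composited pixel in additive mode leaves the environment unchanged, which matches the table's note that black pixels appear transparent.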

Copy the following code into the GetEnvironmentBlendModes() method:

// Retrieves the available blend modes. The first call gets the count of the array that will be returned. The next call fills out the array.

uint32_t environmentBlendModeCount = 0;

OPENXR_CHECK(xrEnumerateEnvironmentBlendModes(m_xrInstance, m_systemID, m_viewConfiguration, 0, &environmentBlendModeCount, nullptr), "Failed to enumerate EnvironmentBlend Modes.");

m_environmentBlendModes.resize(environmentBlendModeCount);

OPENXR_CHECK(xrEnumerateEnvironmentBlendModes(m_xrInstance, m_systemID, m_viewConfiguration, environmentBlendModeCount, &environmentBlendModeCount, m_environmentBlendModes.data()), "Failed to enumerate EnvironmentBlend Modes.");

// Pick the first application supported blend mode supported by the hardware.

for (const XrEnvironmentBlendMode &environmentBlendMode : m_applicationEnvironmentBlendModes) {

if (std::find(m_environmentBlendModes.begin(), m_environmentBlendModes.end(), environmentBlendMode) != m_environmentBlendModes.end()) {

m_environmentBlendMode = environmentBlendMode;

break;

}

}

if (m_environmentBlendMode == XR_ENVIRONMENT_BLEND_MODE_MAX_ENUM) {

XR_TUT_LOG_ERROR("Failed to find a compatible blend mode. Defaulting to XR_ENVIRONMENT_BLEND_MODE_OPAQUE.");

m_environmentBlendMode = XR_ENVIRONMENT_BLEND_MODE_OPAQUE;

}

We enumerated the environment blend modes as shown above. This function took a pointer to the first element in an array of XrEnvironmentBlendMode values, as multiple environment blend modes could be available to the system. The runtime returned an array ordered by its preference for the system. After we enumerated all the XrEnvironmentBlendMode values, we looped through our m_applicationEnvironmentBlendModes, in the application's order of preference, and assigned the first one that also appears in m_environmentBlendModes to m_environmentBlendMode. If no match is found, we log an error and default to XR_ENVIRONMENT_BLEND_MODE_OPAQUE.
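The selection logic can be isolated from OpenXR and checked on its own. The sketch below uses a hypothetical BlendMode enum and a hypothetical SelectBlendMode() helper in place of the tutorial's member variables; only the numeric values mirror XrEnvironmentBlendMode.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for XrEnvironmentBlendMode, so the sketch builds without openxr.h.
enum class BlendMode { Opaque = 1, Additive = 2, AlphaBlend = 3 };

// Return the first mode in the application's preference list that the runtime
// also reports, or the fallback if the two lists have nothing in common.
inline BlendMode SelectBlendMode(const std::vector<BlendMode> &appPreferred,
                                 const std::vector<BlendMode> &runtimeSupported,
                                 BlendMode fallback) {
    for (BlendMode mode : appPreferred) {
        if (std::find(runtimeSupported.begin(), runtimeSupported.end(), mode) != runtimeSupported.end()) {
            return mode;
        }
    }
    return fallback;
}
```

Note that the application's order wins over the runtime's order here: an application that lists opaque first will get opaque on any device that supports it, even if the runtime prefers another mode.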

3.2.2 Reference Spaces¶

Now that OpenXR knows what the user should see, we need to tell OpenXR about the user’s viewpoint. This is where the reference space comes in. Copy the following code into the CreateReferenceSpace() method:

// Fill out an XrReferenceSpaceCreateInfo structure and create a reference XrSpace, specifying a Local space with an identity pose as the origin.

XrReferenceSpaceCreateInfo referenceSpaceCI{XR_TYPE_REFERENCE_SPACE_CREATE_INFO};

referenceSpaceCI.referenceSpaceType = XR_REFERENCE_SPACE_TYPE_LOCAL;

referenceSpaceCI.poseInReferenceSpace = {{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, 0.0f, 0.0f}};

OPENXR_CHECK(xrCreateReferenceSpace(m_session, &referenceSpaceCI, &m_localSpace), "Failed to create ReferenceSpace.");

We fill out an XrReferenceSpaceCreateInfo structure. The first member is of type XrReferenceSpaceType, which we will discuss shortly.

When we create the reference space, we need to specify an XrPosef, which will be the origin transform of the space. In this case, we will set XrReferenceSpaceCreateInfo::poseInReferenceSpace to an “identity” pose - an identity quaternion and a zero position.

If we specify a different pose, the origin received when we poll the space would be offset from the default origin the runtime defines for that space type.
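As a rough illustration of what such an offset means, the sketch below applies a pose (a unit quaternion plus a position) to a point. The Vec3, Quat and Pose types and the TransformPoint() helper are hypothetical stand-ins that only mirror the member order of XrPosef; the tutorial itself relies on xr_linear_algebra.h for this kind of math.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins mirroring XrVector3f, XrQuaternionf and XrPosef.
struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };
struct Pose { Quat orientation; Vec3 position; };

inline Vec3 Cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Rotate v by the unit quaternion q using v' = v + 2w(u x v) + 2 u x (u x v),
// where u is the vector part of q.
inline Vec3 Rotate(const Quat &q, const Vec3 &v) {
    const Vec3 u{q.x, q.y, q.z};
    const Vec3 t = Cross(u, v);
    const Vec3 tt = Cross(u, t);
    return {v.x + 2.0f * (q.w * t.x + tt.x),
            v.y + 2.0f * (q.w * t.y + tt.y),
            v.z + 2.0f * (q.w * t.z + tt.z)};
}

// Express a point given in the offset space in the runtime's default space:
// rotate first, then translate.
inline Vec3 TransformPoint(const Pose &pose, const Vec3 &point) {
    const Vec3 r = Rotate(pose.orientation, point);
    return {r.x + pose.position.x, r.y + pose.position.y, r.z + pose.position.z};
}
```

With the identity pose used in this chapter, TransformPoint() returns its input unchanged. A pose with position {0, 1, 0} would place the new origin one meter above the runtime's default origin.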

An XrSpace is a frame of reference defined not by its instantaneous values, but semantically by its purpose and relationship to other spaces. The actual, instantaneous position and orientation of an XrSpace is called its pose.

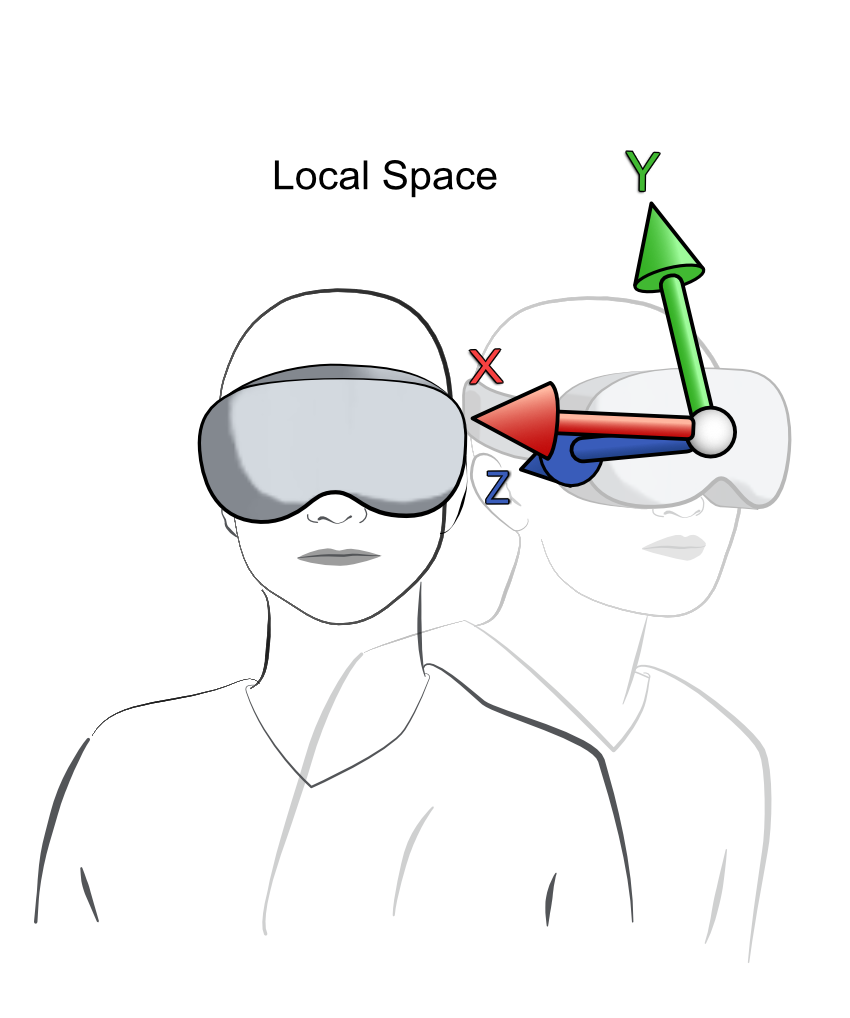

One kind of reference space is view space (XR_REFERENCE_SPACE_TYPE_VIEW), which is oriented with the user’s head, and is useful for user interfaces and many other purposes. We don’t use it to generate view matrices for rendering, because those are often offset from the view space due to stereo rendering.

The View Reference Space uses the view origin (or the centroid of the views in the case of stereo) as the origin of the space. +Y is up, +X is to the right, and -Z is forward. The space is aligned in front of the viewer and it is not gravity aligned. It is most often used for rendering small head-locked content like a HUD (Head-up display).

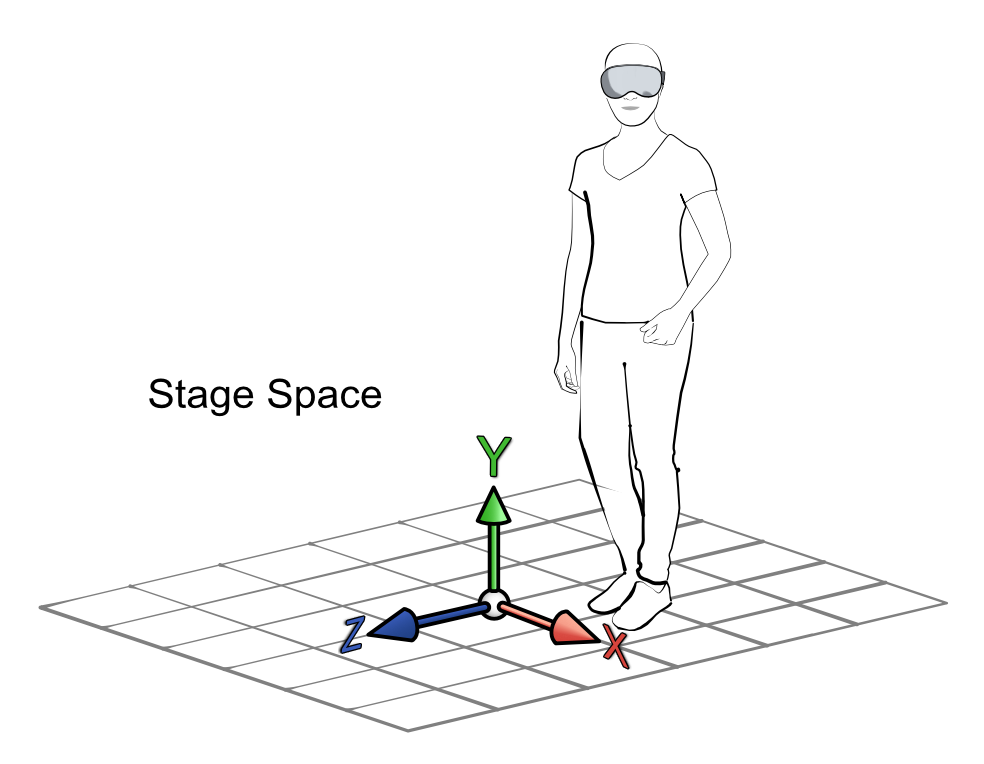

By using XR_REFERENCE_SPACE_TYPE_LOCAL we specify that the views are relative to the XR hardware’s ‘local’ space - either the headset’s starting position or some other world-locked origin.

The Local Reference Space uses an initial location to establish a world-locked, gravity-aligned point as the origin of the space. +Y is up, +X is to the right, and -Z is forward. The origin is also locked for pitch (x) and roll (z). The initial position may be established at application startup or from a calibrated origin point.

It may be used for rendering seated-scale experiences such as driving or aircraft simulation, where a virtual floor is not required. When recentering, the runtime will queue an XrEventDataReferenceSpaceChangePending structure for the application to process.

Some devices support stage space (XR_REFERENCE_SPACE_TYPE_STAGE); this implies a roomscale space, e.g. with its origin on the floor.

The Stage Reference Space defines a rectangular area that is flat and devoid of obstructions. The origin is defined to be on the floor and at the center of the rectangular area. +Y is up, +X is to the right, and -Z is forward. The origin is axis-aligned to the XZ plane. It is most often used for rendering standing-scale experiences (no bounds) or roomscale experiences (with bounds) where a physical floor is required. When the user is redefining the origin or bounds of the area, the runtime will queue an XrEventDataReferenceSpaceChangePending structure for the application to process.

For more information on reference spaces see the OpenXR Specification.

The default coordinate system in OpenXR is right-handed with +Y up, +X to the right, and -Z forward.

The XR_EXT_local_floor extension covers applications that want a seated-scale experience but also need a physical floor. Neither XR_REFERENCE_SPACE_TYPE_LOCAL nor XR_REFERENCE_SPACE_TYPE_STAGE truly fits this requirement.

The Local Floor Reference Space establishes a world-locked, gravity-aligned point as the origin of the space. +Y is up, +X is to the right, and -Z is forward. The origin is the same as XR_REFERENCE_SPACE_TYPE_LOCAL in the X and Z coordinates, but not the Y coordinate. See more here: 12.34. XR_EXT_local_floor.

You may wish to call xrEnumerateReferenceSpaces to get all the XrReferenceSpaceType values available to the system, before choosing one that is suitable for your application and the user’s environment.
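Such an enumeration would follow the same two-call pattern we used for the environment blend modes: one call to get the count, a second to fill the array. The sketch below shows only the shape of that pattern. MockEnumerateSpaces() is a hypothetical stand-in with a capacity/count/array parameter layout similar to the OpenXR enumeration functions, returning a bool instead of an XrResult and reporting a made-up set of spaces; SpaceType is likewise a stand-in for XrReferenceSpaceType.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for XrReferenceSpaceType (values mirror openxr.h).
enum class SpaceType { View = 1, Local = 2, Stage = 3 };

// Mock enumeration function; NOT a real OpenXR call.
// With capacityInput == 0 it only reports the required count.
inline bool MockEnumerateSpaces(uint32_t capacityInput, uint32_t *countOutput, SpaceType *spaces) {
    static const SpaceType available[] = {SpaceType::View, SpaceType::Local};
    const uint32_t count = 2;
    *countOutput = count;
    if (capacityInput == 0) {
        return true; // Size query only.
    }
    if (capacityInput < count || spaces == nullptr) {
        return false; // Caller's array is too small.
    }
    for (uint32_t i = 0; i < count; i++) {
        spaces[i] = available[i];
    }
    return true;
}

// The two-call idiom: query the count, size the vector, then fill it.
inline std::vector<SpaceType> EnumerateSpaces() {
    uint32_t count = 0;
    if (!MockEnumerateSpaces(0, &count, nullptr)) {
        return {};
    }
    std::vector<SpaceType> spaces(count);
    if (!MockEnumerateSpaces(count, &count, spaces.data())) {
        return {};
    }
    spaces.resize(count);
    return spaces;
}
```

An application could then search the returned vector for, say, a stage space and fall back to local space if it is absent.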

At the end of the application, we should destroy the XrSpace by calling xrDestroySpace. If successful, the function will return XR_SUCCESS. Copy the following code into the DestroyReferenceSpace() method:

// Destroy the reference XrSpace.

OPENXR_CHECK(xrDestroySpace(m_localSpace), "Failed to destroy Space.");

3.2.3 Rendering a Frame¶

Below is the code needed for rendering a frame in OpenXR. For each frame, we sequence through the three primary functions: xrWaitFrame, xrBeginFrame and xrEndFrame. These functions wrap around our rendering code and communicate to the OpenXR runtime that we are rendering and that we need to synchronize with the XR compositor. Copy the following code into RenderFrame():

// Get the XrFrameState for timing and rendering info.

XrFrameState frameState{XR_TYPE_FRAME_STATE};

XrFrameWaitInfo frameWaitInfo{XR_TYPE_FRAME_WAIT_INFO};

OPENXR_CHECK(xrWaitFrame(m_session, &frameWaitInfo, &frameState), "Failed to wait for XR Frame.");

// Tell the OpenXR compositor that the application is beginning the frame.

XrFrameBeginInfo frameBeginInfo{XR_TYPE_FRAME_BEGIN_INFO};

OPENXR_CHECK(xrBeginFrame(m_session, &frameBeginInfo), "Failed to begin the XR Frame.");

// Variables for rendering and layer composition.

bool rendered = false;

RenderLayerInfo renderLayerInfo;

renderLayerInfo.predictedDisplayTime = frameState.predictedDisplayTime;

// Check that the session is active and that we should render.

bool sessionActive = (m_sessionState == XR_SESSION_STATE_SYNCHRONIZED || m_sessionState == XR_SESSION_STATE_VISIBLE || m_sessionState == XR_SESSION_STATE_FOCUSED);

if (sessionActive && frameState.shouldRender) {

// Render the stereo image and associate one of the swapchain images with the XrCompositionLayerProjection structure.

rendered = RenderLayer(renderLayerInfo);

if (rendered) {

renderLayerInfo.layers.push_back(reinterpret_cast<XrCompositionLayerBaseHeader *>(&renderLayerInfo.layerProjection));

}

}

// Tell OpenXR that we are finished with this frame; specifying its display time, environment blending and layers.

XrFrameEndInfo frameEndInfo{XR_TYPE_FRAME_END_INFO};

frameEndInfo.displayTime = frameState.predictedDisplayTime;

frameEndInfo.environmentBlendMode = m_environmentBlendMode;

frameEndInfo.layerCount = static_cast<uint32_t>(renderLayerInfo.layers.size());

frameEndInfo.layers = renderLayerInfo.layers.data();

OPENXR_CHECK(xrEndFrame(m_session, &frameEndInfo), "Failed to end the XR Frame.");

The primary structure in use here is the XrFrameState, which contains vital members for timing and rendering such as the predictedDisplayTime member, which is the predicted time that the frame will be displayed to the user, and the shouldRender member, which states whether the application should render any graphics. This last member could change when the application is transitioning into or out of a running session or when the system UI is focused and covering the application.

typedef struct XrFrameState {

XrStructureType type;

void* XR_MAY_ALIAS next;

XrTime predictedDisplayTime;

XrDuration predictedDisplayPeriod;

XrBool32 shouldRender;

} XrFrameState;

The above code is an excerpt from openxr/openxr.h
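Both XrTime and XrDuration are 64-bit signed integers counting nanoseconds, so the timing members can be turned into more familiar units with simple arithmetic. The helpers below are a hypothetical sketch (the names Nanoseconds, RefreshRateHz() and ToMilliseconds() are ours, not OpenXR's) showing how predictedDisplayPeriod relates to the display's refresh rate.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// XrTime and XrDuration are both int64_t nanosecond counts in openxr.h;
// this alias stands in for them so the sketch builds without the header.
using Nanoseconds = int64_t;

// Approximate display refresh rate implied by a predicted display period.
inline double RefreshRateHz(Nanoseconds predictedDisplayPeriod) {
    if (predictedDisplayPeriod <= 0) {
        return 0.0; // No meaningful period reported.
    }
    return 1.0e9 / static_cast<double>(predictedDisplayPeriod);
}

// Convert a duration in nanoseconds to milliseconds, e.g. for logging.
inline double ToMilliseconds(Nanoseconds duration) {
    return static_cast<double>(duration) / 1.0e6;
}
```

For example, a period of 11,111,111 ns corresponds to a display running at roughly 90 Hz.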

xrWaitFrame, xrBeginFrame and xrEndFrame must wrap around all the rendering in the XR frame and must be called in that sequence. xrWaitFrame provides the application with the information for the frame, which we’ve discussed above, and throttles the frame loop to synchronize the frame submissions with the display. Next, xrBeginFrame should be called just before executing any GPU work for the frame. When calling xrEndFrame, we need to pass an XrFrameEndInfo structure to that function. We assign XrFrameState::predictedDisplayTime to XrFrameEndInfo::displayTime. It should be noted that we can modify this value during the frame. Next, we assign our selected blend mode to XrFrameEndInfo::environmentBlendMode. Last, we assign the count of and a pointer to the data of an std::vector<XrCompositionLayerBaseHeader *>, which is a member of our RenderLayerInfo struct. These composition layers are assembled by the OpenXR compositor to create the final images.

typedef struct XrFrameEndInfo {

XrStructureType type;

const void* XR_MAY_ALIAS next;

XrTime displayTime;

XrEnvironmentBlendMode environmentBlendMode;

uint32_t layerCount;

const XrCompositionLayerBaseHeader* const* layers;

} XrFrameEndInfo;

The above code is an excerpt from openxr/openxr.h

XrCompositionLayerBaseHeader is the base structure from which all other XrCompositionLayer... types extend. They describe the type of layer to be composited along with the relevant information. If we have rendered any graphics within this frame, we cast the memory address of our XrCompositionLayer... structure to an XrCompositionLayerBaseHeader pointer and push it into the std::vector<XrCompositionLayerBaseHeader *>. Both of these variables are found within our RenderLayerInfo struct. The vector's size and data pointer are then assigned in our XrFrameEndInfo structure.

typedef struct XR_MAY_ALIAS XrCompositionLayerBaseHeader {

XrStructureType type;

const void* XR_MAY_ALIAS next;

XrCompositionLayerFlags layerFlags;

XrSpace space;

} XrCompositionLayerBaseHeader;

The above code is an excerpt from openxr/openxr.h

Below is a table of the XrCompositionLayer... types provided by the OpenXR 1.0 Core Specification and XR_KHR_composition_layer_... extensions.

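| Extension | Structure | Description |
| --- | --- | --- |
| OpenXR 1.0 Core Specification | XrCompositionLayerProjection | It’s used for rendering 3D graphical elements. |
| OpenXR 1.0 Core Specification | XrCompositionLayerQuad | It’s used for rendering 2D or GUI elements. |
| XR_KHR_composition_layer_cube | XrCompositionLayerCubeKHR | It’s used for rendering environment cubemaps. |
| XR_KHR_composition_layer_depth | XrCompositionLayerDepthInfoKHR | It allows the submission of a depth image with the projection layer for more accurate reprojections. |
| XR_KHR_composition_layer_cylinder | XrCompositionLayerCylinderKHR | It allows a flat texture to be rendered on the inside of a cylinder section - like a curved display. |
| XR_KHR_composition_layer_equirect | XrCompositionLayerEquirectKHR | It’s used for rendering an equirectangular image onto the inside of a sphere - like a cubemap. |
| XR_KHR_composition_layer_color_scale_bias | XrCompositionLayerColorScaleBiasKHR | A color transform applied to an existing composition layer. It could be used to highlight something to the user. |
| XR_KHR_composition_layer_equirect2 | XrCompositionLayerEquirect2KHR | Like XrCompositionLayerEquirectKHR, but uses different parameters similar to XR_KHR_composition_layer_cylinder. |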

Other hardware vendor specific extensions relating to XrCompositionLayer... are also in the OpenXR 1.0 specification.

In our RenderLayerInfo struct, we have used a single XrCompositionLayerProjection. The structure describes the XrCompositionLayerFlags, an XrSpace and a count and pointer to an array of XrCompositionLayerProjectionView.

XrCompositionLayerProjectionView describes the XrPosef of the view relative to the reference space, the field of view and to which XrSwapchainSubImage the view relates.

typedef struct XrSwapchainSubImage {

XrSwapchain swapchain;

XrRect2Di imageRect;

uint32_t imageArrayIndex;

} XrSwapchainSubImage;

typedef struct XrCompositionLayerProjectionView {

XrStructureType type;

const void* XR_MAY_ALIAS next;

XrPosef pose;

XrFovf fov;

XrSwapchainSubImage subImage;

} XrCompositionLayerProjectionView;

typedef struct XrCompositionLayerProjection {

XrStructureType type;

const void* XR_MAY_ALIAS next;

XrCompositionLayerFlags layerFlags;

XrSpace space;

uint32_t viewCount;

const XrCompositionLayerProjectionView* views;

} XrCompositionLayerProjection;

The above code is an excerpt from openxr/openxr.h

The compositing of layers can be set on a per-layer basis through the use of the per-texel alpha channel. This is done through the use of the XrCompositionLayerFlags member. Below is a description of these flags.

| XrCompositionLayerFlags | Description |
| --- | --- |
| XR_COMPOSITION_LAYER_CORRECT_CHROMATIC_ABERRATION_BIT | Enables chromatic aberration correction if not already done. It is planned to be deprecated in OpenXR 1.1. |
| XR_COMPOSITION_LAYER_BLEND_TEXTURE_SOURCE_ALPHA_BIT | Enables the layer texture’s alpha channel for blending. |
| XR_COMPOSITION_LAYER_UNPREMULTIPLIED_ALPHA_BIT | States that the color channels have not been pre-multiplied with alpha for transparency. |

See more here: 10.6.1. Composition Layer Flags.
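To show how the two alpha-related flags interact, here is a small sketch of blending one color channel of a layer over the content beneath it. The constants are hypothetical stand-ins that mirror the bit values in openxr.h (XrCompositionLayerFlags is a 64-bit flag type), and BlendChannel() is our own illustration of the rule, not the compositor's actual implementation.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in constants mirroring the XR_COMPOSITION_LAYER_*_BIT values in openxr.h.
constexpr uint64_t kCorrectChromaticAberrationBit = 0x00000001;
constexpr uint64_t kBlendTextureSourceAlphaBit = 0x00000002;
constexpr uint64_t kUnpremultipliedAlphaBit = 0x00000004;

// Blend one color channel of a layer texel (src) over the content beneath it (dst).
inline float BlendChannel(uint64_t layerFlags, float src, float srcAlpha, float dst) {
    if ((layerFlags & kBlendTextureSourceAlphaBit) == 0) {
        // Texture alpha is ignored: the layer is treated as fully opaque.
        return src;
    }
    // If the color has not been premultiplied, multiply it by alpha first.
    const float color = (layerFlags & kUnpremultipliedAlphaBit) != 0 ? src * srcAlpha : src;
    return color + dst * (1.0f - srcAlpha);
}
```

With only the blend bit set, as in this chapter, the layer's color is expected to be premultiplied by its alpha already.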

Before we call RenderLayer(), we check that the XrSession is active, as we don’t want to needlessly render graphics, and we also check whether OpenXR wants us to render via the use of XrFrameState::shouldRender.

For more information on OpenXR Frame Submission and Frame Timing, see the OpenXR Guide: https://github.com/KhronosGroup/OpenXR-Guide/blob/main/chapters/frame_submission.md

3.2.4 Rendering Layers¶

From the RenderFrame() function we call RenderLayer(). Here, we locate the views within the reference space, render to our swapchain images and fill out the XrCompositionLayerProjection and std::vector<XrCompositionLayerProjectionView> members in the RenderLayerInfo parameter. Copy the two following blocks of code into the RenderLayer() method:

// Locate the views from the view configuration within the (reference) space at the display time.

std::vector<XrView> views(m_viewConfigurationViews.size(), {XR_TYPE_VIEW});

XrViewState viewState{XR_TYPE_VIEW_STATE}; // Will contain information on whether the position and/or orientation is valid and/or tracked.

XrViewLocateInfo viewLocateInfo{XR_TYPE_VIEW_LOCATE_INFO};

viewLocateInfo.viewConfigurationType = m_viewConfiguration;

viewLocateInfo.displayTime = renderLayerInfo.predictedDisplayTime;

viewLocateInfo.space = m_localSpace;

uint32_t viewCount = 0;

XrResult result = xrLocateViews(m_session, &viewLocateInfo, &viewState, static_cast<uint32_t>(views.size()), &viewCount, views.data());

if (result != XR_SUCCESS) {

XR_TUT_LOG("Failed to locate Views.");

return false;

}

// Resize the layer projection views to match the view count. The layer projection views are used in the layer projection.

renderLayerInfo.layerProjectionViews.resize(viewCount, {XR_TYPE_COMPOSITION_LAYER_PROJECTION_VIEW});

// Per view in the view configuration:

for (uint32_t i = 0; i < viewCount; i++) {

SwapchainInfo &colorSwapchainInfo = m_colorSwapchainInfos[i];

SwapchainInfo &depthSwapchainInfo = m_depthSwapchainInfos[i];

// Acquire and wait for an image from the swapchains.

// Get the image index of an image in the swapchains.

// The timeout is infinite.

uint32_t colorImageIndex = 0;

uint32_t depthImageIndex = 0;

XrSwapchainImageAcquireInfo acquireInfo{XR_TYPE_SWAPCHAIN_IMAGE_ACQUIRE_INFO};

OPENXR_CHECK(xrAcquireSwapchainImage(colorSwapchainInfo.swapchain, &acquireInfo, &colorImageIndex), "Failed to acquire Image from the Color Swapchain");

OPENXR_CHECK(xrAcquireSwapchainImage(depthSwapchainInfo.swapchain, &acquireInfo, &depthImageIndex), "Failed to acquire Image from the Depth Swapchain");

XrSwapchainImageWaitInfo waitInfo = {XR_TYPE_SWAPCHAIN_IMAGE_WAIT_INFO};

waitInfo.timeout = XR_INFINITE_DURATION;

OPENXR_CHECK(xrWaitSwapchainImage(colorSwapchainInfo.swapchain, &waitInfo), "Failed to wait for Image from the Color Swapchain");

OPENXR_CHECK(xrWaitSwapchainImage(depthSwapchainInfo.swapchain, &waitInfo), "Failed to wait for Image from the Depth Swapchain");

// Get the width and height and construct the viewport and scissors.

const uint32_t &width = m_viewConfigurationViews[i].recommendedImageRectWidth;

const uint32_t &height = m_viewConfigurationViews[i].recommendedImageRectHeight;

GraphicsAPI::Viewport viewport = {0.0f, 0.0f, (float)width, (float)height, 0.0f, 1.0f};

GraphicsAPI::Rect2D scissor = {{(int32_t)0, (int32_t)0}, {width, height}};

float nearZ = 0.05f;

float farZ = 100.0f;

// Fill out the XrCompositionLayerProjectionView structure specifying the pose and fov from the view.

// This also associates the swapchain image with this layer projection view.

renderLayerInfo.layerProjectionViews[i] = {XR_TYPE_COMPOSITION_LAYER_PROJECTION_VIEW};

renderLayerInfo.layerProjectionViews[i].pose = views[i].pose;

renderLayerInfo.layerProjectionViews[i].fov = views[i].fov;

renderLayerInfo.layerProjectionViews[i].subImage.swapchain = colorSwapchainInfo.swapchain;

renderLayerInfo.layerProjectionViews[i].subImage.imageRect.offset.x = 0;

renderLayerInfo.layerProjectionViews[i].subImage.imageRect.offset.y = 0;

renderLayerInfo.layerProjectionViews[i].subImage.imageRect.extent.width = static_cast<int32_t>(width);

renderLayerInfo.layerProjectionViews[i].subImage.imageRect.extent.height = static_cast<int32_t>(height);

renderLayerInfo.layerProjectionViews[i].subImage.imageArrayIndex = 0; // Useful for multiview rendering.

// Rendering code to clear the color and depth image views.

m_graphicsAPI->BeginRendering();

if (m_environmentBlendMode == XR_ENVIRONMENT_BLEND_MODE_OPAQUE) {

// In VR mode, use a background color.

m_graphicsAPI->ClearColor(colorSwapchainInfo.imageViews[colorImageIndex], 0.17f, 0.17f, 0.17f, 1.00f);

} else {

// In AR mode make the background color black.

m_graphicsAPI->ClearColor(colorSwapchainInfo.imageViews[colorImageIndex], 0.00f, 0.00f, 0.00f, 1.00f);

}

m_graphicsAPI->ClearDepth(depthSwapchainInfo.imageViews[depthImageIndex], 1.0f);

And add the following:

m_graphicsAPI->EndRendering();

// Give the swapchain image back to OpenXR, allowing the compositor to use the image.

XrSwapchainImageReleaseInfo releaseInfo{XR_TYPE_SWAPCHAIN_IMAGE_RELEASE_INFO};

OPENXR_CHECK(xrReleaseSwapchainImage(colorSwapchainInfo.swapchain, &releaseInfo), "Failed to release Image back to the Color Swapchain");

OPENXR_CHECK(xrReleaseSwapchainImage(depthSwapchainInfo.swapchain, &releaseInfo), "Failed to release Image back to the Depth Swapchain");

}

// Fill out the XrCompositionLayerProjection structure for usage with xrEndFrame().

renderLayerInfo.layerProjection.layerFlags = XR_COMPOSITION_LAYER_BLEND_TEXTURE_SOURCE_ALPHA_BIT | XR_COMPOSITION_LAYER_CORRECT_CHROMATIC_ABERRATION_BIT;

renderLayerInfo.layerProjection.space = m_localSpace;

renderLayerInfo.layerProjection.viewCount = static_cast<uint32_t>(renderLayerInfo.layerProjectionViews.size());

renderLayerInfo.layerProjection.views = renderLayerInfo.layerProjectionViews.data();

return true;

Our first call is to xrLocateViews, which takes an XrViewLocateInfo structure and returns an XrViewState structure and an array of XrView structures. This function tells us where the views are in relation to the reference space, as an XrPosef, as well as the field of view, as an XrFovf, for each view; this information is stored in the std::vector<XrView>. The returned XrViewState contains a member of type XrViewStateFlags, which describes whether the position and/or orientation is valid and/or tracked. An XrPosef contains both a three-component position vector and a quaternion orientation, which together describe a transform in 3D space. An XrFovf contains four angles (left, right, up and down), which describe the angular extent of the view’s frustum about the view’s central axis.
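The four angles of an XrFovf are enough to build an (often asymmetric) projection matrix. The following is a minimal sketch of that construction, assuming a column-major matrix, a right-handed view space looking down -Z and a 0 to 1 clip-space depth range as used by Direct3D. Fov and Mat4 are hypothetical stand-ins for XrFovf and XrMatrix4x4f, and ProjectionFromFov() is our own helper; the tutorial itself uses xr_linear_algebra.h for this.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins: Fov mirrors the members of XrFovf (angles in radians),
// Mat4 is a column-major 4x4 matrix.
struct Fov { float angleLeft, angleRight, angleUp, angleDown; };
struct Mat4 { float m[16]; };

// Build a perspective projection from four half-angles.
// Assumes -Z forward and clip-space depth in [0, 1].
inline Mat4 ProjectionFromFov(const Fov &fov, float nearZ, float farZ) {
    const float tanLeft = std::tan(fov.angleLeft);   // Usually negative.
    const float tanRight = std::tan(fov.angleRight);
    const float tanUp = std::tan(fov.angleUp);
    const float tanDown = std::tan(fov.angleDown);   // Usually negative.
    const float width = tanRight - tanLeft;
    const float height = tanUp - tanDown;

    Mat4 result{}; // All elements start at zero.
    result.m[0] = 2.0f / width;
    result.m[5] = 2.0f / height;
    result.m[8] = (tanRight + tanLeft) / width;   // Horizontal off-center shift.
    result.m[9] = (tanUp + tanDown) / height;     // Vertical off-center shift.
    result.m[10] = -farZ / (farZ - nearZ);
    result.m[11] = -1.0f;                         // w_clip = -z_view.
    result.m[14] = -(farZ * nearZ) / (farZ - nearZ);
    return result;
}
```

For a symmetric field of view the two off-center terms vanish. Headsets commonly report asymmetric angles per eye, which is why the four angles are kept separate rather than collapsed into a single vertical field of view and aspect ratio.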

The XrViewLocateInfo structure takes a reference space and a display time from our RenderLayerInfo, from which the view poses are calculated, and also takes our XrViewConfigurationType to locate the correct number of views for the system. If we can’t locate the views, we return false from this method.

We resize our std::vector<XrCompositionLayerProjectionView> member in RenderLayerInfo, and for each view, we render our graphics based on the acquired XrView.

The following sections are repeated for each view whilst we are in the loop, which iterates over the views.

We now acquire both a color and depth image from the swapchains to render to by calling xrAcquireSwapchainImage. This returns via a parameter an index, which we can use to index into an array of swapchain images, or in the case of this tutorial the array of structures containing our swapchain images. Next, we call xrWaitSwapchainImage for both swapchains. We do this to avoid writing to images that the OpenXR compositor is still reading from. These calls will block the CPU thread until the swapchain images are available to use. Skipping slightly forward to the end of the rendering for this view, we call xrReleaseSwapchainImage for the color and depth swapchains. These calls hand the swapchain images back to OpenXR for the compositor to use in creating the image for the view. As with xrBeginFrame and xrEndFrame, the xr...SwapchainImage() functions need to be called in sequence for correct API usage.
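The required ordering can be pictured as a small state machine. The class below is a hypothetical mock, not an OpenXR type, and is simplified to track a single image; it only illustrates that each call is valid from exactly one preceding state.

```cpp
#include <cassert>

// Hypothetical mock of one swapchain image's lifecycle; NOT an OpenXR type.
// Each method returns false if it is called out of sequence.
class MockSwapchainImage {
private:
    enum class State { Released, Acquired, Ready };

public:
    // Mirrors xrAcquireSwapchainImage: only valid once the image has been released.
    bool Acquire() { return Advance(State::Released, State::Acquired); }
    // Mirrors xrWaitSwapchainImage: only valid on an acquired image.
    bool Wait() { return Advance(State::Acquired, State::Ready); }
    // Mirrors xrReleaseSwapchainImage: only valid after a successful wait.
    bool Release() { return Advance(State::Ready, State::Released); }
    // Rendering is only safe between a successful Wait() and Release().
    bool CanRender() const { return m_state == State::Ready; }

private:
    bool Advance(State expected, State next) {
        if (m_state != expected) {
            return false;
        }
        m_state = next;
        return true;
    }

    State m_state = State::Released;
};
```

In the tutorial's loop, the color and depth swapchains each go through this acquire, wait, render, release sequence once per view.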

After we have waited for the swapchain images, but before releasing them, we fill out the XrCompositionLayerProjectionView associated with the view and render our graphics. First, we get the width and height of the view from the XrViewConfigurationView. We used the same recommendedImageRectWidth and recommendedImageRectHeight values when creating the swapchains. We also create the viewport, scissor, nearZ and farZ values for rendering.

We can now fill out the XrCompositionLayerProjectionView using the pose and fov from the associated XrView. For the XrSwapchainSubImage member, we assign the color swapchain used, the offset and extent of the render area and imageArrayIndex. If you are using multiview rendering and your single swapchain is comprised of 2D Array images, where each subresource layer in the image relates to a view, you can use imageArrayIndex to specify the subresource layer of the image used in the rendering of this view. (See Chapter 6.1).

After filling out the XrCompositionLayerProjectionView structure, we can use this tutorial’s GraphicsAPI to clear the images as a very simple test. We first call GraphicsAPI::BeginRendering() to set up any API-specific objects needed for rendering. Next, we call GraphicsAPI::ClearColor(), passing the color image view created from the color swapchain image; note here that we use different clear colors depending on whether our environment blend mode is opaque or otherwise. We also clear our depth image view, which was created from the depth swapchain image, with GraphicsAPI::ClearDepth(). Finally, we call GraphicsAPI::EndRendering() to finish the rendering. This function will submit the work to the GPU and wait for it to be completed.

Now, we have rendered both views and exited the loop.

We fill out the XrCompositionLayerProjection structure and assign our compositing flags of XR_COMPOSITION_LAYER_BLEND_TEXTURE_SOURCE_ALPHA_BIT | XR_COMPOSITION_LAYER_CORRECT_CHROMATIC_ABERRATION_BIT and assign our reference space. We assign to the member viewCount the size of the std::vector<XrCompositionLayerProjectionView> and to the member views a pointer to the first element in the std::vector<XrCompositionLayerProjectionView>. Finally, we return true from the function to state that we have successfully completed our rendering.

You should now see the clear color rendered to each view in your XR system. From here, you can expand the graphical complexity of the scene.

3.3 Rendering Cuboids¶

Now that we have color and depth clears working, we can start to render geometry in our scene. We will use GraphicsAPI to create vertex, index and uniform/constant buffers along with shaders and a pipeline to render some cuboids representing the floor and a table surface.

Update the methods and members in the class. Copy the highlighted code:

class OpenXRTutorial {

private:

struct RenderLayerInfo;

public:

// [...] Constructor and Destructor created in previous chapters.

void Run() {

CreateInstance();

CreateDebugMessenger();

GetInstanceProperties();

GetSystemID();

GetViewConfigurationViews();

GetEnvironmentBlendModes();

CreateSession();

CreateReferenceSpace();

CreateSwapchains();

CreateResources();

while (m_applicationRunning) {

PollSystemEvents();

PollEvents();

if (m_sessionRunning) {

RenderFrame();

}

}

DestroyResources();

DestroySwapchains();

DestroyReferenceSpace();

DestroySession();

DestroyDebugMessenger();

DestroyInstance();

}

private:

// [...] Methods created in previous chapters.

void GetViewConfigurationViews()

{

// [...]

}

void CreateSwapchains()

{

// [...]

}

void DestroySwapchains()

{

// [...]

}

void GetEnvironmentBlendModes()

{

// [...]

}

void CreateReferenceSpace()

{

// [...]

}

void DestroyReferenceSpace()

{

// [...]

}

void RenderFrame()

{

// [...]

}

bool RenderLayer(RenderLayerInfo& renderLayerInfo)

{

// [...]

}

void RenderCuboid(XrPosef pose, XrVector3f scale, XrVector3f color)

{

}

void CreateResources()

{

}

void DestroyResources()

{

}

private:

// [...] Members created in previous chapters.

std::vector<XrViewConfigurationType> m_applicationViewConfigurations = {XR_VIEW_CONFIGURATION_TYPE_PRIMARY_STEREO, XR_VIEW_CONFIGURATION_TYPE_PRIMARY_MONO};

std::vector<XrViewConfigurationType> m_viewConfigurations;

XrViewConfigurationType m_viewConfiguration = XR_VIEW_CONFIGURATION_TYPE_MAX_ENUM;

std::vector<XrViewConfigurationView> m_viewConfigurationViews;

struct SwapchainInfo {

XrSwapchain swapchain = XR_NULL_HANDLE;

int64_t swapchainFormat = 0;

std::vector<void *> imageViews;

};

std::vector<SwapchainInfo> m_colorSwapchainInfos = {};

std::vector<SwapchainInfo> m_depthSwapchainInfos = {};

std::vector<XrEnvironmentBlendMode> m_applicationEnvironmentBlendModes = {XR_ENVIRONMENT_BLEND_MODE_OPAQUE, XR_ENVIRONMENT_BLEND_MODE_ADDITIVE};

std::vector<XrEnvironmentBlendMode> m_environmentBlendModes = {};

XrEnvironmentBlendMode m_environmentBlendMode = XR_ENVIRONMENT_BLEND_MODE_MAX_ENUM;

XrSpace m_localSpace = XR_NULL_HANDLE;

struct RenderLayerInfo {

XrTime predictedDisplayTime;

std::vector<XrCompositionLayerBaseHeader *> layers;

XrCompositionLayerProjection layerProjection = {XR_TYPE_COMPOSITION_LAYER_PROJECTION};

std::vector<XrCompositionLayerProjectionView> layerProjectionViews;

};

float m_viewHeightM = 1.5f;

void *m_vertexBuffer = nullptr;

void *m_indexBuffer = nullptr;

void *m_uniformBuffer_Camera = nullptr;

void *m_uniformBuffer_Normals = nullptr;

void *m_vertexShader = nullptr, *m_fragmentShader = nullptr;

void *m_pipeline = nullptr;

};

To draw our geometry, we will need a simple mathematics library for vectors, matrices and the like. Download this header file and place it in the Common folder under the workspace directory:

In main.cpp, add the following code under the current header include statements:

// include xr linear algebra for XrVector and XrMatrix classes.

#include <xr_linear_algebra.h>

// Declare some useful operators for vectors:

XrVector3f operator-(XrVector3f a, XrVector3f b) {

return {a.x - b.x, a.y - b.y, a.z - b.z};

}

XrVector3f operator*(XrVector3f a, float b) {

return {a.x * b, a.y * b, a.z * b};

}

Now, we will need to set up all of our rendering resources for our scene. This consists of a vertex/index buffer-pair that holds the geometry data for a cube and a uniform/constant buffer large enough to hold multiple instances of our CameraConstants struct. We use GraphicsAPI::CreateBuffer() to create and upload our data to the GPU.

Above void CreateResources() add the following code defining CameraConstants and an array of XrVector4f normals:

struct CameraConstants {

XrMatrix4x4f viewProj;

XrMatrix4x4f modelViewProj;

XrMatrix4x4f model;

XrVector4f color;

XrVector4f pad1;

XrVector4f pad2;

XrVector4f pad3;

};

CameraConstants cameraConstants;

XrVector4f normals[6] = {

{1.00f, 0.00f, 0.00f, 0},

{-1.00f, 0.00f, 0.00f, 0},

{0.00f, 1.00f, 0.00f, 0},

{0.00f, -1.00f, 0.00f, 0},

{0.00f, 0.00f, 1.00f, 0},

{0.00f, 0.0f, -1.00f, 0}};
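It is worth checking the size of CameraConstants. Three 4x4 float matrices take 192 bytes and the color takes 16, so the three padding vectors bring the total to 256 bytes. That figure matches the 256-byte placement alignment Direct3D 12 requires for constant buffer data, although the tutorial text does not state that this is the reason for the padding. The sketch below verifies the arithmetic with hypothetical stand-in types (Vec4 and Mat4 in place of XrVector4f and XrMatrix4x4f) and a hypothetical CameraConstantsOffset() helper for locating the data of a given cuboid.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-ins for XrVector4f and XrMatrix4x4f, so the sketch
// builds without the OpenXR headers.
struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[16]; };

// Same member layout as the CameraConstants struct above.
struct CameraConstants {
    Mat4 viewProj;
    Mat4 modelViewProj;
    Mat4 model;
    Vec4 color;
    Vec4 pad1;
    Vec4 pad2;
    Vec4 pad3;
};

// 3 * 64 bytes of matrices + 16 bytes of color + 3 * 16 bytes of padding.
static_assert(sizeof(CameraConstants) == 256, "CameraConstants is expected to occupy 256 bytes");

// Byte offset of the constants for one cuboid when several instances
// are stored back to back in a single uniform buffer.
inline size_t CameraConstantsOffset(size_t cuboidIndex) {
    return cuboidIndex * sizeof(CameraConstants);
}
```

Because every instance starts on a multiple of 256 bytes, each cuboid's constants can be bound by offset into the one shared buffer.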

Copy the following code into CreateResources():

// Vertices for a 1x1x1 meter cube. (Left/Right, Top/Bottom, Front/Back)

constexpr XrVector4f vertexPositions[] = {

{+0.5f, +0.5f, +0.5f, 1.0f},

{+0.5f, +0.5f, -0.5f, 1.0f},

{+0.5f, -0.5f, +0.5f, 1.0f},

{+0.5f, -0.5f, -0.5f, 1.0f},

{-0.5f, +0.5f, +0.5f, 1.0f},

{-0.5f, +0.5f, -0.5f, 1.0f},

{-0.5f, -0.5f, +0.5f, 1.0f},

{-0.5f, -0.5f, -0.5f, 1.0f}};

#define CUBE_FACE(V1, V2, V3, V4, V5, V6) vertexPositions[V1], vertexPositions[V2], vertexPositions[V3], vertexPositions[V4], vertexPositions[V5], vertexPositions[V6],

XrVector4f cubeVertices[] = {

CUBE_FACE(2, 1, 0, 2, 3, 1) // +X

CUBE_FACE(6, 4, 5, 6, 5, 7) // -X

CUBE_FACE(0, 1, 5, 0, 5, 4) // +Y

CUBE_FACE(2, 6, 7, 2, 7, 3) // -Y

CUBE_FACE(0, 4, 6, 0, 6, 2) // +Z

CUBE_FACE(1, 3, 7, 1, 7, 5) // -Z

};

uint32_t cubeIndices[36] = {

0, 1, 2, 3, 4, 5, // +X

6, 7, 8, 9, 10, 11, // -X

12, 13, 14, 15, 16, 17, // +Y

18, 19, 20, 21, 22, 23, // -Y

24, 25, 26, 27, 28, 29, // +Z

30, 31, 32, 33, 34, 35, // -Z

};

m_vertexBuffer = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::VERTEX, sizeof(float) * 4, sizeof(cubeVertices), &cubeVertices});

m_indexBuffer = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::INDEX, sizeof(uint32_t), sizeof(cubeIndices), &cubeIndices});

size_t numberOfCuboids = 2;

m_uniformBuffer_Camera = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::UNIFORM, 0, sizeof(CameraConstants) * numberOfCuboids, nullptr});

m_uniformBuffer_Normals = m_graphicsAPI->CreateBuffer({GraphicsAPI::BufferCreateInfo::Type::UNIFORM, 0, sizeof(normals), &normals});
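The cube is stored as 36 fully expanded vertices, six per face, and the normals array has one entry per face in the same order. The sketch below checks that relationship: it assumes that a normal is looked up as normals[vertexIndex / 6] (an assumption on our part about how the shader uses the buffer) and confirms that every vertex lies on the side of the cube that its normal points to. Vec4 and the k-prefixed arrays are stand-ins that copy the data from the code above.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for XrVector4f.
struct Vec4 { float x, y, z, w; };

// The eight corners, copied from vertexPositions above.
constexpr Vec4 kCorners[8] = {
    {+0.5f, +0.5f, +0.5f, 1.0f}, {+0.5f, +0.5f, -0.5f, 1.0f},
    {+0.5f, -0.5f, +0.5f, 1.0f}, {+0.5f, -0.5f, -0.5f, 1.0f},
    {-0.5f, +0.5f, +0.5f, 1.0f}, {-0.5f, +0.5f, -0.5f, 1.0f},
    {-0.5f, -0.5f, +0.5f, 1.0f}, {-0.5f, -0.5f, -0.5f, 1.0f}};

// Corner indices per face, in the same order as the CUBE_FACE lines above.
constexpr int kFaces[6][6] = {
    {2, 1, 0, 2, 3, 1}, {6, 4, 5, 6, 5, 7}, {0, 1, 5, 0, 5, 4},
    {2, 6, 7, 2, 7, 3}, {0, 4, 6, 0, 6, 2}, {1, 3, 7, 1, 7, 5}};

// Per-face normals, copied from the normals array above.
constexpr Vec4 kNormals[6] = {
    {1.0f, 0.0f, 0.0f, 0.0f}, {-1.0f, 0.0f, 0.0f, 0.0f},
    {0.0f, 1.0f, 0.0f, 0.0f}, {0.0f, -1.0f, 0.0f, 0.0f},
    {0.0f, 0.0f, 1.0f, 0.0f}, {0.0f, 0.0f, -1.0f, 0.0f}};

// Position of expanded vertex i (0..35).
inline Vec4 CubeVertex(size_t i) { return kCorners[kFaces[i / 6][i % 6]]; }

// Assumed lookup: six consecutive vertices share one normal.
inline Vec4 CubeNormal(size_t i) { return kNormals[i / 6]; }

// True if every vertex sits half a meter from the center along its normal.
inline bool AllVerticesMatchNormals() {
    for (size_t i = 0; i < 36; i++) {
        const Vec4 v = CubeVertex(i);
        const Vec4 n = CubeNormal(i);
        if (v.x * n.x + v.y * n.y + v.z * n.z != 0.5f) {
            return false;
        }
    }
    return true;
}
```

Since no vertices are shared between faces, the index buffer is simply 0 to 35 in order; it is kept so that the same indexed draw path works for other meshes.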

Now, we will add the code to load and create our shaders with GraphicsAPI::CreateShader(). Copy the following code into CreateResources():

For Direct3D, add this code to define our vertex and pixel shaders:

if (m_apiType == D3D11) {

std::vector<char> vertexSource = ReadBinaryFile("VertexShader_5_0.cso");

m_vertexShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::VERTEX, vertexSource.data(), vertexSource.size()});

std::vector<char> fragmentSource = ReadBinaryFile("PixelShader_5_0.cso");

m_fragmentShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::FRAGMENT, fragmentSource.data(), fragmentSource.size()});

}

if (m_apiType == D3D12) {

std::vector<char> vertexSource = ReadBinaryFile("VertexShader_5_1.cso");

m_vertexShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::VERTEX, vertexSource.data(), vertexSource.size()});

std::vector<char> fragmentSource = ReadBinaryFile("PixelShader_5_1.cso");

m_fragmentShader = m_graphicsAPI->CreateShader({GraphicsAPI::ShaderCreateInfo::Type::FRAGMENT, fragmentSource.data(), fragmentSource.size()});

}
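ReadBinaryFile() is a helper from the tutorial's common code and is not shown in this section. As a rough idea of what such a helper might do, here is a minimal sketch that loads a compiled .cso file into memory. ReadBinaryFileSketch is a hypothetical name, and the tutorial's actual implementation may differ, for example in how it reports errors.

```cpp
#include <cassert>
#include <fstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for the tutorial's ReadBinaryFile():
// reads the whole file at `path` into a byte vector.
std::vector<char> ReadBinaryFileSketch(const std::string &path) {
    // Open in binary mode, positioned at the end so tellg() reports the size.
    std::ifstream stream(path, std::ios::binary | std::ios::ate);
    if (!stream.is_open()) {
        throw std::runtime_error("Could not open file: " + path);
    }
    const std::streamsize size = stream.tellg();
    if (size < 0) {
        throw std::runtime_error("Could not query size of file: " + path);
    }
    std::vector<char> data(static_cast<std::size_t>(size));
    stream.seekg(0, std::ios::beg);
    if (size > 0 && !stream.read(data.data(), size)) {
        throw std::runtime_error("Could not read file: " + path);
    }
    // On success, exactly `size` bytes were read (or none for an empty file).
    assert(size == 0 || stream.gcount() == size);
    return data;
}
```

Binary mode matters here: compiled shader bytecode can contain any byte value, so the file must be read without newline translation.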

Now we’ll combine the shaders, the vertex input layout, and the rendering state for drawing a solid cube, into a pipeline object using GraphicsAPI::CreatePipeline(). Add the following code to CreateResources():

GraphicsAPI::PipelineCreateInfo pipelineCI;

pipelineCI.shaders = {m_vertexShader, m_fragmentShader};

pipelineCI.vertexInputState.attributes = {{0, 0, GraphicsAPI::VertexType::VEC4, 0, "TEXCOORD"}};

pipelineCI.vertexInputState.bindings = {{0, 0, 4 * sizeof(float)}};

pipelineCI.inputAssemblyState = {GraphicsAPI::PrimitiveTopology::TRIANGLE_LIST, false};

pipelineCI.rasterisationState = {false, false, GraphicsAPI::PolygonMode::FILL, GraphicsAPI::CullMode::BACK, GraphicsAPI::FrontFace::COUNTER_CLOCKWISE, false, 0.0f, 0.0f, 0.0f, 1.0f};

pipelineCI.multisampleState = {1, false, 1.0f, 0xFFFFFFFF, false, false};

pipelineCI.depthStencilState = {true, true, GraphicsAPI::CompareOp::LESS_OR_EQUAL, false, false, {}, {}, 0.0f, 1.0f};

pipelineCI.colorBlendState = {false, GraphicsAPI::LogicOp::NO_OP, {{true, GraphicsAPI::BlendFactor::SRC_ALPHA, GraphicsAPI::BlendFactor::ONE_MINUS_SRC_ALPHA, GraphicsAPI::BlendOp::ADD, GraphicsAPI::BlendFactor::ONE, GraphicsAPI::BlendFactor::ZERO, GraphicsAPI::BlendOp::ADD, (GraphicsAPI::ColorComponentBit)15}}, {0.0f, 0.0f, 0.0f, 0.0f}};

pipelineCI.colorFormats = {m_colorSwapchainInfos[0].swapchainFormat};

pipelineCI.depthFormat = m_depthSwapchainInfos[0].swapchainFormat;

pipelineCI.layout = {{0, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX},

{1, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX},

{2, nullptr, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::FRAGMENT}};

m_pipeline = m_graphicsAPI->CreatePipeline(pipelineCI);
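The colorBlendState entry is terse, so it may help to spell out the arithmetic it appears to describe. The sketch below assumes the blend attachment fields follow the same order as Vulkan's VkPipelineColorBlendAttachmentState (enable, source/destination color factors, color op, source/destination alpha factors, alpha op, write mask). Rgba and BlendSrcAlphaOver are illustrative names, not part of GraphicsAPI.

```cpp
#include <cassert>

struct Rgba { float r, g, b, a; };

// Blend arithmetic under the assumed field order:
//   color = src.rgb * src.a + dst.rgb * (1 - src.a)   (SRC_ALPHA, ONE_MINUS_SRC_ALPHA, ADD)
//   alpha = src.a * 1 + dst.a * 0                     (ONE, ZERO, ADD)
Rgba BlendSrcAlphaOver(const Rgba &src, const Rgba &dst) {
    assert(src.a >= 0.0f && src.a <= 1.0f);
    const float inv = 1.0f - src.a;
    return {src.r * src.a + dst.r * inv,
            src.g * src.a + dst.g * inv,
            src.b * src.a + dst.b * inv,
            src.a};
}
```

Under that assumption, a fragment with alpha 1.0 simply replaces the destination color, while a half-transparent red fragment over opaque blue yields (0.5, 0.0, 0.5) with alpha 0.5.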

To destroy the resources, add this code to DestroyResources(); it uses the corresponding GraphicsAPI::Destroy...() methods:

m_graphicsAPI->DestroyPipeline(m_pipeline);

m_graphicsAPI->DestroyShader(m_fragmentShader);

m_graphicsAPI->DestroyShader(m_vertexShader);

m_graphicsAPI->DestroyBuffer(m_uniformBuffer_Camera);

m_graphicsAPI->DestroyBuffer(m_uniformBuffer_Normals);

m_graphicsAPI->DestroyBuffer(m_indexBuffer);

m_graphicsAPI->DestroyBuffer(m_vertexBuffer);

With our rendering resources now set up, we can add the code needed for rendering the cuboids. We will set up the RenderCuboid() method, which is a little helper method that renders a cuboid. It also tracks the number of rendered cuboids with renderCuboidIndex. This is used so that we can correctly index into the right section of the uniform/constant buffer for positioning the cuboid and camera.
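The indexing scheme can be modeled on the CPU as an array of fixed-size slots, one per cuboid. The sketch below is a hedged illustration only: CameraConstantsSketch is a hypothetical stand-in for CameraConstants, and its padding to 256 bytes is an assumption made here because some APIs (Direct3D 12, for example) require constant buffer views to start at 256-byte aligned offsets. UniformSlots is likewise an illustrative name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical stand-in for CameraConstants (the real struct is defined
// earlier in the chapter). The padding to 256 bytes is an assumption.
struct CameraConstantsSketch {
    float viewProj[16];
    float modelViewProj[16];
    float model[16];
    float color[4];
    float pad[12];
};
static_assert(sizeof(CameraConstantsSketch) == 256, "one 256-byte slot per cuboid");

// CPU-side model of a uniform buffer holding one slot per cuboid.
class UniformSlots {
public:
    explicit UniformSlots(std::size_t slotCount)
        : m_bytes(sizeof(CameraConstantsSketch) * slotCount) {}

    // Copies `constants` into slot `index`; returns the byte offset used.
    std::size_t Write(std::size_t index, const CameraConstantsSketch &constants) {
        const std::size_t offset = sizeof(CameraConstantsSketch) * index;
        assert(offset + sizeof(CameraConstantsSketch) <= m_bytes.size());
        std::memcpy(m_bytes.data() + offset, &constants, sizeof(constants));
        return offset;
    }

    // Reads back the contents of slot `index`.
    CameraConstantsSketch Read(std::size_t index) const {
        const std::size_t offset = sizeof(CameraConstantsSketch) * index;
        assert(offset + sizeof(CameraConstantsSketch) <= m_bytes.size());
        CameraConstantsSketch out;
        std::memcpy(&out, m_bytes.data() + offset, sizeof(out));
        return out;
    }

private:
    std::vector<unsigned char> m_bytes;
};
```

Because each cuboid writes to its own slot, the data for the first draw is not overwritten while the second draw is being recorded. This is also why the index has to be reset before the cuboids of each view are drawn.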

Above void RenderCuboid(), add the following code:

size_t renderCuboidIndex = 0;

Inside RenderCuboid(), add the following:

XrMatrix4x4f_CreateTranslationRotationScale(&cameraConstants.model, &pose.position, &pose.orientation, &scale);

XrMatrix4x4f_Multiply(&cameraConstants.modelViewProj, &cameraConstants.viewProj, &cameraConstants.model);

cameraConstants.color = {color.x, color.y, color.z, 1.0};

size_t offsetCameraUB = sizeof(CameraConstants) * renderCuboidIndex;

m_graphicsAPI->SetPipeline(m_pipeline);

m_graphicsAPI->SetBufferData(m_uniformBuffer_Camera, offsetCameraUB, sizeof(CameraConstants), &cameraConstants);

m_graphicsAPI->SetDescriptor({0, m_uniformBuffer_Camera, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX, false, offsetCameraUB, sizeof(CameraConstants)});

m_graphicsAPI->SetDescriptor({1, m_uniformBuffer_Normals, GraphicsAPI::DescriptorInfo::Type::BUFFER, GraphicsAPI::DescriptorInfo::Stage::VERTEX, false, 0, sizeof(normals)});

m_graphicsAPI->UpdateDescriptors();

m_graphicsAPI->SetVertexBuffers(&m_vertexBuffer, 1);

m_graphicsAPI->SetIndexBuffer(m_indexBuffer);

m_graphicsAPI->DrawIndexed(36);

renderCuboidIndex++;

From the passed-in pose and scale, we create the model matrix and multiply it with CameraConstants::viewProj to obtain CameraConstants::modelViewProj, the matrix that transforms the vertices of our unit cube from model space into projection space. We apply our pipeline, which contains the shaders and render states. We upload cameraConstants to this cuboid's section of the camera uniform buffer, then bind that section and the buffer containing the cuboid normals as descriptors for the vertex shader. Finally, we assign our vertex and index buffers and draw 36 indices.
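To make the matrix step concrete, here is a small standalone sketch of the same arithmetic. It does not use the tutorial's XrMatrix4x4f helpers: Mat4, Vec3, MakeTranslationScale, Multiply, TransformPoint and NearlyEqual are illustrative names, the rotation is fixed to identity (as for the cuboids in this chapter), and Multiply(a, b) is assumed to mean a * b on column-major matrices, matching the argument order used with viewProj and model.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 matrix: element (row, col) lives at m[col * 4 + row].
using Mat4 = std::array<float, 16>;
struct Vec3 { float x, y, z; };

// Model matrix for translation `t` and scale `s` with identity rotation (T * S).
Mat4 MakeTranslationScale(const Vec3 &t, const Vec3 &s) {
    return {s.x, 0.0f, 0.0f, 0.0f,
            0.0f, s.y, 0.0f, 0.0f,
            0.0f, 0.0f, s.z, 0.0f,
            t.x, t.y, t.z, 1.0f};
}

// Returns a * b; applied to a point, b acts first and a second.
Mat4 Multiply(const Mat4 &a, const Mat4 &b) {
    Mat4 r{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            for (int k = 0; k < 4; ++k)
                r[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
    return r;
}

// Transforms the point (p, 1) by an affine matrix and returns xyz.
Vec3 TransformPoint(const Mat4 &m, const Vec3 &p) {
    // Only valid when the bottom row is (0, 0, 0, 1), so w stays 1.
    assert(m[3] == 0.0f && m[7] == 0.0f && m[11] == 0.0f && m[15] == 1.0f);
    return {m[0] * p.x + m[4] * p.y + m[8] * p.z + m[12],
            m[1] * p.x + m[5] * p.y + m[9] * p.z + m[13],
            m[2] * p.x + m[6] * p.y + m[10] * p.z + m[14]};
}

bool NearlyEqual(float a, float b) { return std::fabs(a - b) < 1e-5f; }
```

For example, with an assumed view height of 1.5 m, MakeTranslationScale({0.0f, -1.5f, 0.0f}, {2.0f, 0.1f, 2.0f}) moves the cube corner (0.5, 0.5, 0.5) to roughly (1.0, -1.45, 1.0), which is a corner of the floor's top surface. A real projection matrix changes w, so the full transform in the shader works on four-component vectors rather than on points as in this sketch.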

Now, moving to the RenderLayer() method: under the call to ClearDepth(), and before the call to EndRendering(), add the following code:

m_graphicsAPI->SetRenderAttachments(&colorSwapchainInfo.imageViews[colorImageIndex], 1, depthSwapchainInfo.imageViews[depthImageIndex], width, height, m_pipeline);

m_graphicsAPI->SetViewports(&viewport, 1);

m_graphicsAPI->SetScissors(&scissor, 1);

// Compute the view-projection transform.

// All matrices (including OpenXR's) are column-major, right-handed.

XrMatrix4x4f proj;

XrMatrix4x4f_CreateProjectionFov(&proj, m_apiType, views[i].fov, nearZ, farZ);

XrMatrix4x4f toView;

XrVector3f scale1m{1.0f, 1.0f, 1.0f};

XrMatrix4x4f_CreateTranslationRotationScale(&toView, &views[i].pose.position, &views[i].pose.orientation, &scale1m);

XrMatrix4x4f view;

XrMatrix4x4f_InvertRigidBody(&view, &toView);

XrMatrix4x4f_Multiply(&cameraConstants.viewProj, &proj, &view);
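The view matrix is obtained by inverting the eye's pose matrix. A pose is a rigid-body transform (rotation plus translation, no scale), so its inverse can be written directly as the transposed rotation and a rotated, negated translation. The sketch below shows that idea under stated assumptions: it is not the implementation of XrMatrix4x4f_InvertRigidBody, and Mat4, Vec3, MakePoseYaw, InvertRigidBodySketch, TransformPoint and Near are illustrative names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 matrix: element (row, col) lives at m[col * 4 + row].
using Mat4 = std::array<float, 16>;
struct Vec3 { float x, y, z; };

// Pose matrix: rotation by `angle` radians about +Y, then translation `t`.
Mat4 MakePoseYaw(float angle, const Vec3 &t) {
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return {c, 0.0f, -s, 0.0f,
            0.0f, 1.0f, 0.0f, 0.0f,
            s, 0.0f, c, 0.0f,
            t.x, t.y, t.z, 1.0f};
}

// Inverse of [R t; 0 1] is [R^T  -R^T t; 0 1].
Mat4 InvertRigidBodySketch(const Mat4 &m) {
    assert(m[3] == 0.0f && m[7] == 0.0f && m[11] == 0.0f && m[15] == 1.0f);
    Mat4 r{};
    // Transpose the 3x3 rotation block.
    for (int col = 0; col < 3; ++col)
        for (int row = 0; row < 3; ++row)
            r[col * 4 + row] = m[row * 4 + col];
    // New translation is -(R^T * t).
    for (int row = 0; row < 3; ++row)
        r[12 + row] = -(r[row] * m[12] + r[4 + row] * m[13] + r[8 + row] * m[14]);
    r[15] = 1.0f;
    return r;
}

// Transforms the point (p, 1) by an affine matrix and returns xyz.
Vec3 TransformPoint(const Mat4 &m, const Vec3 &p) {
    return {m[0] * p.x + m[4] * p.y + m[8] * p.z + m[12],
            m[1] * p.x + m[5] * p.y + m[9] * p.z + m[13],
            m[2] * p.x + m[6] * p.y + m[10] * p.z + m[14]};
}

bool Near(float a, float b) { return std::fabs(a - b) < 1e-5f; }
```

A useful sanity check is that the view matrix maps the eye's own position to the origin, and a world-space point one meter along the eye's local -Z axis to (0, 0, -1) in view space.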

The code added to RenderLayer() sets the color and depth image views as rendering attachments for the output merger/color blend stage to write to. We also set the viewport, which the rasterizer uses to transform from normalized device coordinates to texel space, and the scissor, which the rasterizer uses to clip in texel space. Our viewport and scissor cover the whole render area. Next, we compute the projection and view matrices and multiply them together to create the view-projection matrix stored in CameraConstants::viewProj. Now, add the following code:

renderCuboidIndex = 0;

// Draw a floor. Scale it by 2 in X and Z, and by 0.1 in Y.

RenderCuboid({{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, -m_viewHeightM, 0.0f}}, {2.0f, 0.1f, 2.0f}, {0.4f, 0.5f, 0.5f});

// Draw a "table".

RenderCuboid({{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, -m_viewHeightM + 0.9f, -0.7f}}, {1.0f, 0.2f, 1.0f}, {0.6f, 0.6f, 0.4f});

Finally, we set renderCuboidIndex to 0 and call RenderCuboid() twice, drawing two cuboids. The first is offset downward by our (arbitrary) view height to represent a “floor”; we scale it by 2 meters in the horizontal directions and 0.1 meter in the vertical, so it’s flat. The second is centered 0.9 meters above the floor's center and 0.7 meters along -Z, and represents a “table”. With that, we should now have a clear color and two cuboids rendered to each view in your XR system.

3.4 Summary

Our XR application now renders graphics to the views and uses the XR compositor to present them correctly. In the next chapter, we will discuss how to use OpenXR to interact with your XR application, enabling new experiences in spatial computing.

Below is a download link to a zip archive for this chapter containing all the C++ and CMake code for all platforms and graphics APIs.

Version: v1.0.13